upload.js

The day after dConstruct, this year, the third IndieWebCampUK event was held at the Clearleft offices in Brighton. I only managed to make it to the tail end of the first day, having taken all of that morning and most of that afternoon to nurse a hangover and spend time with friends who I had not seen in a long time.

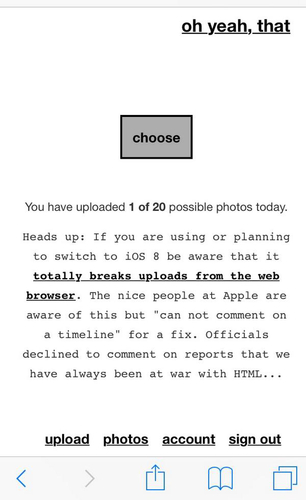

As luck would have it I arrived in time for Jeremy to lead a session on photos. During the conversation he touched on the fact that web browsers on modern phones and tablets will trigger the camera when a user invokes the <input type="file" /> element. This is how things like oh yeah, that work, and I mentioned that I've been thinking about tweaking the code that does the actual uploading of a file to first write the data to the browser's local cache, or storage, and then transfer the file in the background.

This is useful because it offers a fail-safe for uploads that don't succeed, typically because a network connection times out. That kind of failure is simply inconvenient if you're uploading photos from the phone's gallery but actually a problem if you're uploading directly from the camera because (at least under iOS) copies of the photo are not written to disk so anything but success means those photos vanish into thin air. I think the cool kids call this "ephemeral". It also allows you to do Instagram-style trickery by telling the user that something has been uploaded much faster than it has because... well, it hasn't been yet.

I've been trying some of these approaches with projects like dogeared using the browser's localStorage APIs and it all works fine except that, again under iOS at least, there is a hard cap on the amount of data you can cache and it turns out it doesn't take long to reach that limit when you're saving the full text of large PDF documents to your phone. I mentioned all of this to Dietrich Ayala the other day pointing out that the problem with my clever plan for pre-caching photos is that you'd only be able to store one or two photos before the whole thing failed.

"Use localForage instead", he said. It wraps all of the various storage APIs across platforms and in particular the ones that have a size limit that can expand and contract as needed. It's also asynchronous so reading and writing to disk won't block the execution of the main UI.

I sat down last night and decided to spend some time trying to make it work. I didn't have high hopes of getting anything working on the first pass but then a funny thing happened: It worked!

What follows is a step-by-step annotation of the code I wrote. It's not the most elegant code and I'm sure there's lots of room for improvement but it works, for me at least. I've also included a copy of the raw source code here and as a gist on the GitHub if anyone would like to submit patches or gentle cluebats.

This is the basic setup. Here we define a callback for when the <form> element with ID upload-form is submitted. Once invoked we grab the upload which is returned as a File thing-y. Careful readers will note the use of jQuery. There is nothing jQuery-specific about any of this but I haven't gotten around to generalizing it out of the code.

function upload_init(){

$("#upload-form").submit(function(){ // hello, jQuery

try {

var photos = $("#photo");

var files = photos[0].files;

var file = files[0];

This is the important bit. This is where we take the data in the upload, convert it to a data URI and store it in the cache. We create a unique key for the upload using a Base64 encoding of the current time and then invoke the localforage.setItem command. Well, actually all we're doing is defining something to happen once the File thing-y (the upload) has been read (as a DataURL) by the FileReader. Because... computers. Just go with it.

var reader = new FileReader();

reader.onload = function(evt){

var data_uri = evt.target.result;

var dt = new Date();

var pending_id = window.btoa(dt.toISOString());

var key = "upload_" + pending_id;

localforage.setItem(key, data_uri, function(rsp){

upload_process_pending(pending_id);

});

};

reader.readAsDataURL(file)

}

This is just a bunch of boring but necessary code to trap any errors that might occur above.

catch(e){

console.log("Hrm, there was a problem uploading your photo.", e);

return false;

}

return false;

});

See this? Note that we are still inside the upload_init() function. Before we exit we're going to first clear any "I am processing an upload" flags and set up a timer to watch for anything new that's been added to the cache and needs to be processed.

upload_clear_processing();

setInterval(upload_process_pending, 60000); // adjust to taste

}
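The key-generation step inside the reader.onload callback above can be pulled out and looked at on its own. What follows is a minimal sketch, not part of the original source: the function names make_upload_key and pending_id_to_date are hypothetical, and it uses the global btoa() and atob() functions rather than window.btoa so that it also runs outside a browser. It shows that the ID is simply the Base64-encoded ISO 8601 timestamp, which means the time of the upload can be recovered from the key later.

```javascript
// Hypothetical helper (not in the original source): build the cache key for
// an upload from a Date, the same way the reader.onload callback does.
function make_upload_key(dt){
	// btoa() Base64-encodes the ISO 8601 timestamp; it is an encoding, not a hash
	var pending_id = btoa(dt.toISOString());
	return { pending_id: pending_id, key: "upload_" + pending_id };
}

// Hypothetical helper: decode an ID back in to the Date it was made from
function pending_id_to_date(pending_id){
	return new Date(atob(pending_id));
}

var made = make_upload_key(new Date());
console.log(made.key, pending_id_to_date(made.pending_id));
```

One consequence of this scheme is that two uploads stored in the same millisecond would share a key, which seems unlikely for a single person with a single camera.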

We are finally done with the upload_init() function and the following are two helper functions to iterate over all the localForage keys looking for specific patterns and performing a corresponding action: Delete anything starting with processing_ or pass anything starting with upload_ to the upload_process_pending_id() function, below.

function upload_clear_processing(){

var re = /processing_(.*)/;

localforage.keys(function(rsp){

rsp.forEach(function(key){ // one closure per key, so each callback sees its own match

var m = key.match(re);

if (m){

localforage.removeItem(m[0], function(){

console.log("unset " + m[0]);

});

}

});

});

}

function upload_process_pending(){

var re = /upload_(.*)/;

localforage.keys(function(rsp){

var count = rsp.length;

for (var i=0; i < count; i++){

var m = rsp[i].match(re);

if (m){

console.log("process pending id " + m[1]);

upload_process_pending_id(m[1]);

}

}

});

}
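Both helpers above share the same pattern: list the keys, pick out the ones with a given prefix and do something with what follows the prefix. As a rough sketch, that filtering step could be written as a plain function that works on any array of key names. The name pending_ids_for_prefix is hypothetical and not part of the original source. Unlike the regular expressions above, which are not anchored and would also match a key with text in front of the prefix, this version only accepts keys that start with the prefix.

```javascript
// Hypothetical helper (not in the original source): given an array of cache
// keys, return the IDs of the keys that start with the given prefix.
function pending_ids_for_prefix(keys, prefix){
	var ids = [];
	for (var i=0; i < keys.length; i++){
		var key = keys[i];
		// indexOf() returning 0 means the key begins with the prefix
		if (key.indexOf(prefix) === 0){
			ids.push(key.substring(prefix.length));
		}
	}
	return ids;
}

var example_keys = ["upload_abc", "processing_abc", "upload_def", "settings"];
console.log(pending_ids_for_prefix(example_keys, "upload_"));      // the IDs "abc" and "def"
console.log(pending_ids_for_prefix(example_keys, "processing_"));  // the ID "abc"
```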

This is where we start uploading files. Almost.

function upload_process_pending_id(pending_id){

var processing_key = "processing_" + pending_id;

var upload_key = "upload_" + pending_id;

console.log("process id: " + processing_key);

console.log("process id: " + upload_key);

The first thing we do is check to see whether the current ID/key is in the process of being uploaded. If it is we can move on to other things. Because localForage is asynchronous, however, we have to nest that logic inside of a callback to the localforage.getItem command.

localforage.getItem(processing_key, function(rsp){

if (rsp){

console.log("got processing key, so skipping");

return;

}

It gets better. If we've gotten this far we've established that no one else is processing the current ID/key so the next thing we need to do is set a flag indicating that we are. Which, like everything else, has to happen asynchronously. You can see where this is going, right?

var dt = new Date();

var ts = dt.getTime();

localforage.setItem(processing_key, ts, function(rsp){

console.log("set " + processing_key + ", to " + ts);

Once that's done, we need to retrieve the cached data for the current ID/key and now we have callbacks to localForage commands nested three deep...

localforage.getItem(upload_key, function(data_uri){

console.log("got data uri for " + upload_key);

But whatever, it works. So now we need to convert the cached data URI back into a File thing-y or, more specifically... a Blob. Honestly, I don't understand either. I'm willing to believe that it's all internally consistent technically but the documentation remains a bit lacking. Anyway, either it works and we hand everything off to the upload_do_upload() function or we remove the processing_ flag on the key and hope that it will simply work the next time it's invoked. This is not ideal but you get the idea. Also: I've not included the code to convert a data URI to a Blob object in this blog post but it is included with a copy of the source code.

try {

var blob = upload_data_uri_to_blob(data_uri);

}

catch(e){

console.log("failed to create a blob for " + upload_key + ", because " + e);

localforage.removeItem(processing_key, function(){});

return false;

}

upload_do_upload(blob, pending_id);

Close all the callbacks because... Original Sin?

});

});

});

}
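The data-URI-to-Blob conversion mentioned above is not shown in the post. For readers who want a sense of what such a helper involves, here is a minimal sketch under stated assumptions. It is not the upload_data_uri_to_blob() function from the original source; the name example_data_uri_to_blob is hypothetical, and it only handles Base64-encoded data URIs, which is the form that FileReader.readAsDataURL produces.

```javascript
// Hypothetical stand-in (not the helper from the original source): convert
// a Base64 data URI of the form data:<mime type>;base64,<payload> to a Blob.
function example_data_uri_to_blob(data_uri){
	var comma = data_uri.indexOf(",");
	if (data_uri.indexOf("data:") !== 0 || comma === -1){
		throw new Error("not a data URI");
	}
	// Everything between "data:" and the comma, e.g. "image/jpeg;base64"
	var parts = data_uri.substring(5, comma).split(";");
	if (parts[parts.length - 1] !== "base64"){
		throw new Error("only base64 data URIs are handled in this sketch");
	}
	// If there is no MIME type in the header the Blob is left untyped
	var mime = (parts.length > 1) ? parts[0] : "";
	// atob() returns a binary string; copy each character code in to a byte array
	var binary = atob(data_uri.substring(comma + 1));
	var bytes = new Uint8Array(binary.length);
	for (var i=0; i < binary.length; i++){
		bytes[i] = binary.charCodeAt(i);
	}
	return new Blob([bytes], { type: mime });
}

// "aGVsbG8=" is the Base64 encoding of the five-byte string "hello"
var example_blob = example_data_uri_to_blob("data:text/plain;base64,aGVsbG8=");
console.log(example_blob.size, example_blob.type);
```

Because the helper throws on anything it does not recognize, it fits the try/catch shown above, where a failure clears the processing_ flag so the upload can be retried later.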

This is where the damn photo is finally uploaded. There's nothing fancy going on here beyond using a FormData thing-y to store form data because it will take care of all the annoying details of actually sending the file across the wire.

function upload_do_upload(file, pending_id){

console.log("do upload for " + pending_id);

var data = new FormData();

data.append('photo', file);

These are the usual success and error handlers for the upload request. The relevant bits are where we remove stuff from the localForage cache depending on the state of things.

var on_success = function(rsp){

console.log(rsp);

var processing_key = "processing_" + pending_id;

var upload_key = "upload_" + pending_id;

localforage.removeItem(upload_key, function(rsp){

console.log("removed " + upload_key);

localforage.removeItem(processing_key, function(rsp){

console.log("removed " + processing_key);

});

});

};

var on_error = function(rsp){

console.log(rsp);

var processing_key = "processing_" + pending_id;

localforage.removeItem(processing_key, function(rsp){

console.log("removed " + processing_key);

});

};

Now we send the file to the server. The details of this part, including any authorization or authentication tokens that should be appended to the form, are left as an exercise for the reader. Note the use of the jQuery ajax() function. Again, there's nothing jQuery-specific about any of this. It's just what I use because it's convenient.

$.ajax({

url: 'http://upload.example.com/', // adjust to taste

type: "POST",

data: data,

cache: false,

contentType: false,

processData: false,

dataType: "json",

success: on_success,

error: on_error,

});

return false;

}
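Since nothing about the upload is jQuery-specific, the same request can be sketched with the browser's fetch() function. This is an illustration only and not part of the original source: the name example_upload_with_fetch is hypothetical, and the fetch function is passed in as an argument (in a browser that would be window.fetch) so that it can be swapped out. It assumes, like the dataType: "json" setting above, that the server answers with JSON.

```javascript
// Hypothetical jQuery-free variant of upload_do_upload() (not in the
// original source). Returns a promise for the parsed JSON response.
function example_upload_with_fetch(file, url, fetch_fn){
	var data = new FormData();
	data.append("photo", file);
	// No Content-Type header is set here, so that the multipart boundary
	// is filled in automatically when the FormData body is sent
	return fetch_fn(url, { method: "POST", body: data }).then(function(rsp){
		if (! rsp.ok){
			throw new Error("upload failed with status " + rsp.status);
		}
		return rsp.json();
	});
}

// Usage, assuming the on_success and on_error handlers defined above:
// example_upload_with_fetch(file, 'http://upload.example.com/', fetch).then(on_success, on_error);
```

A network failure or a non-2xx response both end up in the error handler, which matches the behaviour above of clearing the processing_ flag and leaving the cached upload in place for another try.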

It's all still early days and I've not done any real stress-testing of this out in the wild but it suggests that it's possible to build a working on-and-offline network-enabled camera as a plain-vanilla web application. The offline part is still a few steps away, in reality, because of genuinely boring and finicky details that have to do with security and caching but it feels like a problem that can be solved with a little bit of patience.

I find this exciting.

Update: Meanwhile, this... So this eventually got fixed in iOS 8.0.2 but I am going to leave the screenshot for the record...
