Presenting the SkyTerrace Interactive Map at NACIS 2022

This was originally published on the Mills Field weblog, in November, 2022.

These are the slides and notes for the presentation I did at the 2022 North American Cartographic Information Society (NACIS) conference in Minneapolis. The talk, titled "Interactive Maps of SFO from 1930 to 2022 (and beyond) at T2 SkyTerrace", discusses the technical requirements, constraints and decisions made building the interactive map application on display in the SkyTerrace Observation Deck in Terminal 2.

Hi, my name is Aaron. I work at the San Francisco International Airport Museum. The airport is owned and operated by the City and County of San Francisco. In 1980 it created an arts program which eventually became the museum. At the moment there are 25 active galleries located throughout the terminals and since its founding the museum has produced over 1,800 exhibitions. The museum also has a permanent collection of 150,000 objects related to the history of the airport and commercial aviation. We have an amazing collection of airsickness bags.

If you didn't know this, or were unaware of the scope of the museum's work, don't worry. Most people don't and part of my job, as I see it, is to use the internet (and a lot of maps) to help fix this problem.

I started writing my notes for this talk, in long form, last week and noticed that I had written 2,500 words before reaching the halfway point. So, this is not going to be that talk. I am going to skip from peak to peak, dipping in to the details only when it seems necessary. Which means there may be places during this talk where you are left wondering "But, what about..."

If you do I encourage you to come find me after the talk and I will be happy to answer your questions.

In 2018 we made a web application, hosted on the museum's Mills Field website, of old aerial imagery of SFO. It's a pretty standard Leaflet-based web application with imagery from as early as 1930 all the way up to 2022 sourced from the museum's collection, the airport's GIS department and the special collections library at UC Santa Barbara.

Fun fact: SFO has its own map projection. (It has two, actually.)

Our short-term goal is to eventually have imagery for every year since 1927 in order to explain how the airport has grown and changed. Our long-term goal is to do this for every other airport that SFO holds hands with.

Another fun fact: There is only one structure from 1956 still standing at SFO: The arrivals and departures concourse at Terminal 2 which is, coincidentally, where the SkyTerrace observation deck is located. At the end of 2018 I was asked whether we could turn that web application in to a touch-based interactive installation to be included in that same SkyTerrace Observation Deck, set to open in February of 2020.

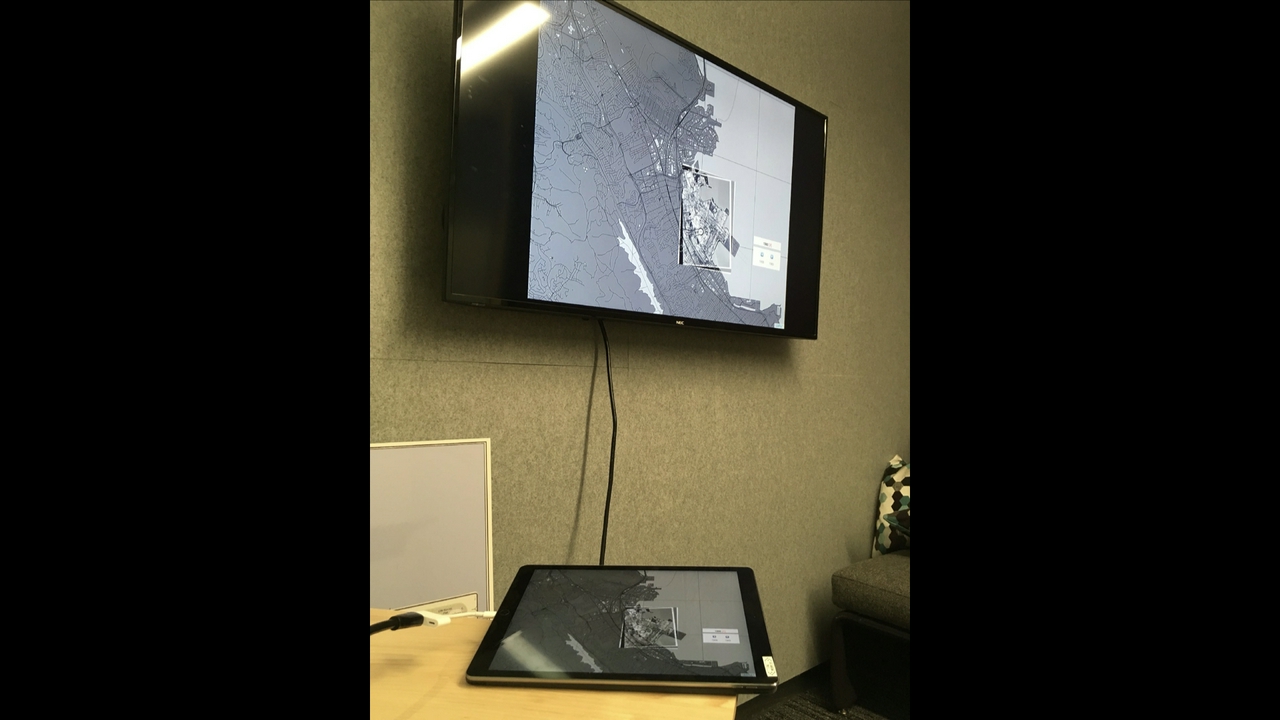

This is a photo of some early work done to imagine what the interface for that application would look like, when we were thinking that we might use a single, large touch-enabled monitor mounted to the wall. Had we done that, however, Americans with Disabilities Act (ADA) requirements would have meant that only the bottom third of the display was available for use as an interactive surface. The remaining two-thirds of the screen, including the arrows in this example, would have been out of reach of people in wheelchairs.

This image shows the SFO of 2019 overlaid on top of the SFO of 1946. Do you see that angled terminal at the bottom of the screen? That was demolished shortly after this photo was taken to finish construction on the new Harvey Milk Terminal 1 building and is a good illustration of why we are so keen to document, and map, the history of the airport.

Two more fun facts: One, SFO Museum maintains an openly licensed catalog of historical architectural footprints of the airport but we don't have time to talk about that today. Two, the old non-existent Terminal 1 building is still on Google Maps in 2022.

One thing worth mentioning is that the airport already had a history of hiring outside firms to design and develop these kinds of digital, interactive installations. Those experiences haven't always ended well so when I started thinking about how to transition the work we'd done online to the physical world I was keenly aware that people were wary about whether any of this would work. With that in mind the first part of the design process for developing an interactive map was to answer four questions:

What is the least expensive hardware configuration that guarantees responsiveness and durability?

Use an iPad as a single-person controller device connected to a consumer-grade display that can be seen by multiple people in the SkyTerrace.

On the face of it iPads are not the cheapest devices on the market today. But when you factor in the quality and responsiveness of touch support in iOS, the durability of the screens, the processing power and available storage and the overall stability of long-running applications and then compare the iPad to other consumer and other vendor offerings the price no longer seems so bad. Importantly it is a fixed price and there are reasonable assurances that the same amount of money will buy better hardware, or that less money will buy equivalent hardware, in the future.

What is the fastest way to develop, fix and deploy the software that runs the application?

Develop a web application, using an open-source tool like Cordova or Electron that can be compiled as an iOS application, because it is easy to debug and ensures portability.

Developing native applications for either of the two major platforms, iOS and Android, has often been described as death by a thousand cuts. It is a time-consuming process and even minor updates are often laborious. On the other hand SFO Museum already had an existing web application so why not re-use that? Even though there would inevitably be changes to accommodate an interactive kiosk the core of the application would remain the same. Importantly developing, and debugging, web applications is easier and faster than native applications. Wrapping that application in a framework like Cordova or Electron would allow us to target not just iOS but Android devices as well which would be an important buffer against vendor lock-in.

How to address security concerns about a network-enabled device installed in a public area at an airport?

Bundle all the map data on the iPad and run the application offline, disabling all network interfaces.

The decision to make the application work offline was a pragmatic one. Any device and especially a network-enabled device deployed on the airport campus, particularly in public areas, is subject to rigorous scrutiny. That scrutiny, though warranted, demands time and resources from other departments which means everything takes longer to do because it is work that is scheduled and prioritized relative to all the other things happening at a busy airport. Rather than fetching all the map tiles necessary to display the historic aerial imagery over the network we simply bundled those tiles on, and read them from, the iPad itself.
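To make the idea of bundling tiles a little more concrete, here is a minimal sketch of how one might work out which tiles need to be copied on to the device and where to read them from. It assumes the common XYZ ("slippy map") tile numbering for Web Mercator, which the talk does not confirm, and the function names and the `tiles/<layer>/<z>/<x>/<y>.png` layout are hypothetical.

```typescript
// Assumption: standard XYZ tile numbering. All names and the directory
// layout below are hypothetical, chosen for illustration only.
interface Tile {
  z: number;
  x: number;
  y: number;
}

// Tile that contains a given latitude/longitude at zoom level z.
function tileFor(lat: number, lon: number, z: number): Tile {
  const n = Math.pow(2, z);
  const latRad = (lat * Math.PI) / 180;
  const x = Math.floor(((lon + 180) / 360) * n);
  const merc = Math.log(Math.tan(latRad) + 1 / Math.cos(latRad));
  const y = Math.floor(((1 - merc / Math.PI) / 2) * n);
  return { z: z, x: x, y: y };
}

// Every tile needed to cover a bounding box at one zoom level.
function tilesForBounds(
  south: number,
  west: number,
  north: number,
  east: number,
  z: number
): Tile[] {
  const topLeft = tileFor(north, west, z);
  const bottomRight = tileFor(south, east, z);
  const tiles: Tile[] = [];
  for (let x = topLeft.x; x <= bottomRight.x; x++) {
    for (let y = topLeft.y; y <= bottomRight.y; y++) {
      tiles.push({ z: z, x: x, y: y });
    }
  }
  return tiles;
}

// Relative path of a tile inside the application bundle (hypothetical layout).
function bundledTilePath(layer: string, t: Tile): string {
  return "tiles/" + layer + "/" + t.z + "/" + t.x + "/" + t.y + ".png";
}
```

A tile layer in the web application could then point at the relative path template instead of a remote URL, so no network request is ever made.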

What is the fastest (and least costly) way to recover from a catastrophic failure?

Just go to the Apple Store and buy another iPad.

I mentioned that the airport and the museum are owned and operated by the City and County of San Francisco. As such we can't "just go to the Apple Store and buy another iPad" because we have rules and processes for spending that kind of money. But it points to the idea of ensuring that the "time to recovery" when a catastrophic failure occurs (because it will) is as short as possible. If necessary it would be easier and faster to find a way to authorize a one-time purchase of a consumer device, available at a variety of outlets within driving range of the airport, than to deal with creating a purchase order for a vendor and then waiting for it to be fulfilled.

So that's what we did. And everything seemed to be going according to plan until we realized that we couldn't use either Electron or Cordova to package our web application. The earliest versions of the application had simply been mirroring the iPad to the external display but it was decided that we did not want to show the interface elements used to control the map on the external display.

At the time, I was surprised to discover that the only way to show different content on two screens from a single application was to use native iOS frameworks. This might still be the case. At this point it felt like there was a real risk that we would get dragged in to the quicksand of native application development.

Fortunately native mobile application frameworks make it possible to embed and use a web application as a first class interface element. Importantly, in iOS, it is also possible to relay messages between the web application and the native parent application using JavaScript.

What this meant is that we could continue to use the original web application, with some minor changes, to send Leaflet map events from the web application back up to its parent iOS application which would then transmit them down to the equivalent web application running on the external display.

We made a bucket brigade to relay and synchronize Leaflet map events, rather than touch events, between two different web applications. That's kind of the whole trick but it's a good one and when you realize that it's really an interface for exposing native iOS functionality to embedded web applications it opens up a world of possibilities.
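Here is a rough sketch of what the web-application ends of that bucket brigade might look like. It is not the museum's code: the message shape and every name in it are assumptions made for illustration. The only things borrowed from real APIs are that a Leaflet map has a `setView(center, zoom)` method and that a page running in a WKWebView can hand a value to its native parent through `window.webkit.messageHandlers.<name>.postMessage(...)`. The relay is passed in as a plain function so the same code works whatever carries the message.

```typescript
// Hypothetical message shape; the real application may send other fields.
interface MapMessage {
  type: string; // the Leaflet event name, for example "moveend"
  center: [number, number]; // [latitude, longitude]
  zoom: number;
}

// One link in the bucket brigade: anything that carries a string onward.
// In a WKWebView this could wrap
// window.webkit.messageHandlers.<handlerName>.postMessage(payload).
type Relay = (payload: string) => void;

// The small slice of a Leaflet map that the receiving side needs.
interface MapLike {
  setView(center: [number, number], zoom: number): unknown;
}

function encodeMessage(msg: MapMessage): string {
  return JSON.stringify(msg);
}

// Returns null rather than throwing so a malformed message is simply dropped.
function decodeMessage(payload: string): MapMessage | null {
  let data: any;
  try {
    data = JSON.parse(payload);
  } catch (err) {
    return null;
  }
  const ok =
    data !== null &&
    typeof data === "object" &&
    typeof data.type === "string" &&
    Array.isArray(data.center) &&
    data.center.length === 2 &&
    typeof data.center[0] === "number" &&
    typeof data.center[1] === "number" &&
    typeof data.zoom === "number";
  if (!ok) {
    return null;
  }
  const msg: MapMessage = {
    type: data.type,
    center: [data.center[0], data.center[1]],
    zoom: data.zoom,
  };
  return msg;
}

// Controller side: call the returned function from a map event handler.
function makeSender(relay: Relay): (msg: MapMessage) => void {
  return (msg: MapMessage) => relay(encodeMessage(msg));
}

// Display side: apply a relayed message to the second map.
function applyMessage(map: MapLike, payload: string): boolean {
  const msg = decodeMessage(payload);
  if (msg === null) {
    return false;
  }
  map.setView(msg.center, msg.zoom);
  return true;
}
```

The point of relaying map state rather than raw touch events is that the receiving map only ever needs to be told where to look, which keeps the two web applications independent of each other's layout and screen size.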

And it worked. Importantly, we had also created a generic framework for developing web applications that could, with predictable and manageable costs, run offline and be deployed to any 4x4 foot space at the airport. When I talk about costs I'm not talking about the five or six figure budgets that external agencies might charge for something like this. I'm talking low four figures plus the cost of application development and building furniture to house the installation.

I mentioned that the SkyTerrace was set to open in February 2020 but that was eventually pushed back to later in the year. And then COVID-19 happened. Passenger traffic cratered, everyone started working from home and, not surprisingly, projects like the SkyTerrace were put on hold. The satellites were working, though, so SFO still got aerial imagery that year and this is what the airport looked like in July, 2020.

The good news is that, when the airport and the museum began to resume on-site operations in earnest, the application I'd developed had been running unattended and attached to a big honking monitor for 18 months and everything still worked. Go me!

The bad news was that no one wanted to touch any kind of public surface anymore. Whether or not that concern was justified was irrelevant so far as the airport was concerned. We meet the passengers where they are, so to speak, and I was asked: Could the installation be controlled by an individual passenger's phone or mobile device instead of their finger? Oh, and also, the SkyTerrace was going to open in a month or so.

My first concern was figuring out how to rewrite as little of the original application as possible. There simply wasn't time for anything else. Do you remember the way I mentioned that the original touch-based application was relaying Leaflet events to iOS using the JavaScript bridge? It turns out the crux of the problem of, as well as the solution to, transitioning things to an application controlled by a person's phone was simply replacing that bridge with a network-based equivalent.

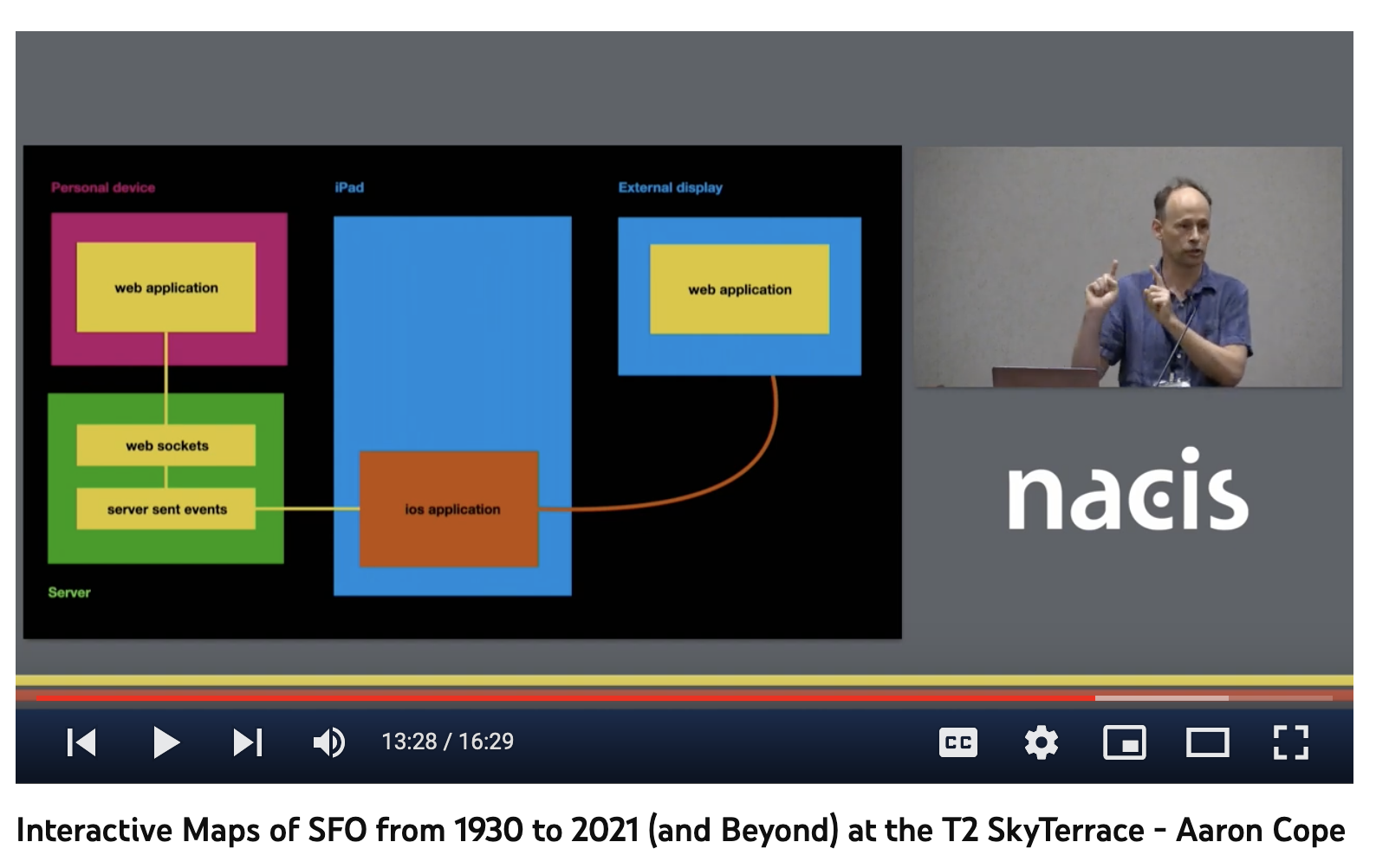

This is the original design sketch I made when I started thinking about the problem.

As a reminder, this was the original design for how messages were brokered between the iPad and the external display.

This was the updated design. In order to accomplish this we would need to run a web server that performed two functions.

On the one hand it would serve the same map application we'd already developed, but to a passenger's phone. It would capture the Leaflet map events as usual and send them back to the server using WebSockets, a web standard for bi-directional communication between parties (in this case the server and a person's browser).

On the other hand the server would parrot those same events back to the iPad using Server-Sent Events, another web standard for unidirectional communications, which would then pass the messages to the application running on the external display as it always had.
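The server's relaying role can be sketched as a small in-memory broker: whatever arrives from a phone over a WebSocket is published, and every connected listener (in this case the iPad) receives it as a Server-Sent Events frame. This is an illustration under assumptions rather than the museum's relay server. The class and function names are made up and the actual network plumbing is left out; the one detail taken from the standard is the frame format, where each line of the payload is prefixed with `data: ` and the frame ends with a blank line.

```typescript
// A listener receives ready-to-write Server-Sent Events frames.
type Subscriber = (frame: string) => void;

// Format one payload as a Server-Sent Events frame. Each payload line gets
// its own "data: " prefix and a blank line terminates the frame.
function formatSSE(payload: string): string {
  const lines = payload.split("\n").map((line) => "data: " + line);
  return lines.join("\n") + "\n\n";
}

// Hypothetical broker sitting between the WebSocket side and the SSE side.
class RelayBroker {
  private subscribers: Array<{ id: number; fn: Subscriber }> = [];
  private nextId = 1;

  // Called when a display connects to the event stream.
  // Returns a function to call when that connection closes.
  subscribe(fn: Subscriber): () => void {
    const id = this.nextId++;
    this.subscribers.push({ id: id, fn: fn });
    return () => {
      this.subscribers = this.subscribers.filter((s) => s.id !== id);
    };
  }

  // Called for each message received from a phone.
  // Returns how many listeners the message was delivered to.
  publish(payload: string): number {
    const frame = formatSSE(payload);
    for (const s of this.subscribers) {
      s.fn(frame);
    }
    return this.subscribers.length;
  }
}
```

In a real server the subscriber function would write the frame to an open HTTP response with the `text/event-stream` content type, and `publish` would be called from the WebSocket message handler.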

This was the same bucket brigade sending events to the application running on the external display but with a few more participants. Likewise none of the hardware changed. We just hid the iPad but it was still there connected to the external display and doing all the heavy lifting.

Control of the map is governed by passengers scanning a QR code shown on the external display. The code contains a URL and a time-sensitive access token which, when scanned, causes the QR code to disappear and grants control of the map to that passenger for five minutes. After five minutes a new QR code is displayed, which allows another passenger to assume control, but the old access token will continue to work until then.
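The hand-off rules can be sketched as a small state machine. This is one reading of the behaviour described above, not the production logic: I am assuming that the old token keeps working until someone else scans the new QR code, and the class, its method names and the injected clock and token generator are all hypothetical. A real deployment would want tokens from a cryptographically secure source.

```typescript
// Five minutes of exclusive control, as described in the talk.
const SESSION_MS = 5 * 60 * 1000;

// Hypothetical gate deciding which token, if any, may control the map.
class ControlGate {
  private now: () => number;
  private newToken: () => string;
  private offered: string | null; // token in the visible QR code, null when hidden
  private active: string | null = null; // token currently controlling the map
  private activeSince = 0;

  constructor(now: () => number, newToken: () => string) {
    this.now = now;
    this.newToken = newToken;
    this.offered = newToken();
  }

  // Once a session is five minutes old, offer a fresh token (show a new QR code).
  private refresh(): void {
    const expired =
      this.active !== null && this.now() - this.activeSince >= SESSION_MS;
    if (expired && this.offered === null) {
      this.offered = this.newToken();
    }
  }

  // Token to encode in the QR code, or null if the code should be hidden.
  qrToken(): string | null {
    this.refresh();
    return this.offered;
  }

  // Called when a passenger scans the code. Hides the code and starts a session.
  claim(token: string): boolean {
    this.refresh();
    if (this.offered === null || token !== this.offered) {
      return false;
    }
    this.active = token;
    this.activeSince = this.now();
    this.offered = null;
    return true;
  }

  // Whether map events carrying this token should be relayed to the display.
  mayControl(token: string): boolean {
    this.refresh();
    return this.active !== null && token === this.active;
  }
}
```

Passing the clock in as a function is just a convenience for testing; in practice it would be `Date.now`.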

All of which introduced a host of new software, hardware, network and security dependencies but in September 2021 the SkyTerrace observation deck opened to the public along with a working hands-free interactive map application.

And, amazingly, everything worked fine. At least until iOS 15 was released.

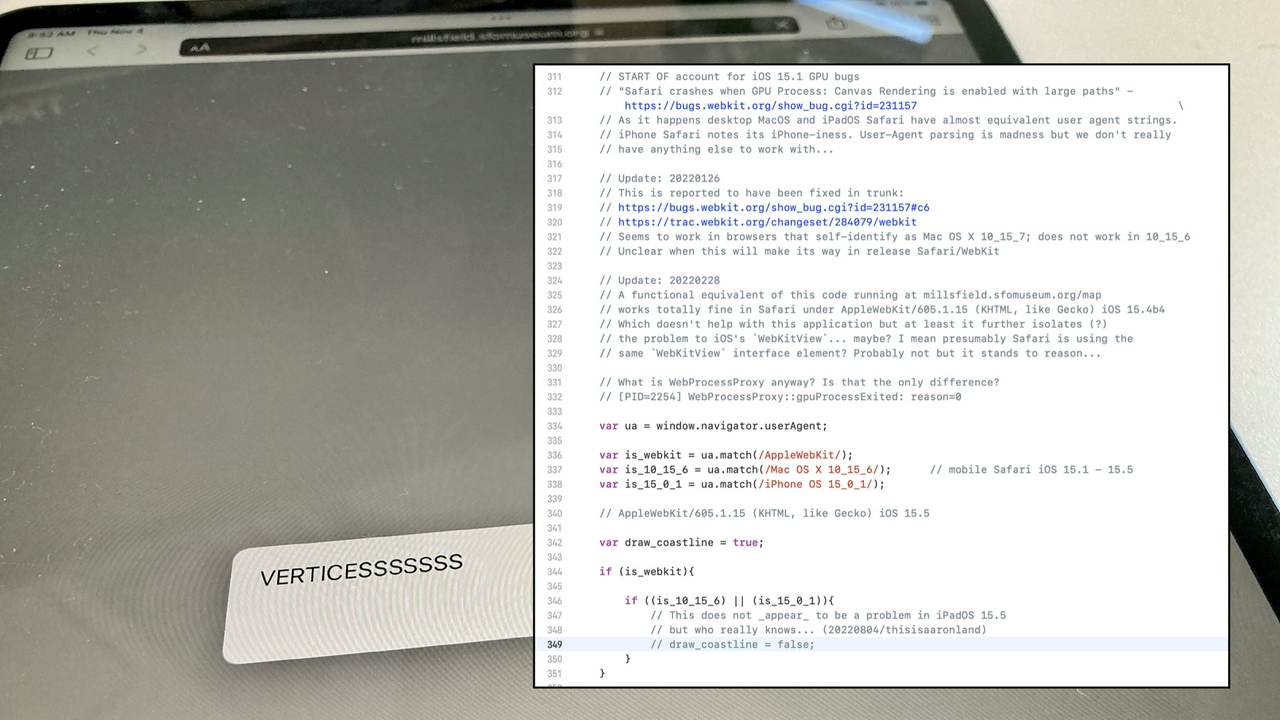

You may not remember but it was eventually determined that, for security reasons, iOS 14 is basically unsafe at any speed. I was not going to be the guy who left unpatched hardware with known security issues on the floor in the airport so I diligently updated the iPad running the interactive installation. Whereupon the application was no longer able to render the San Francisco Bay Area coastline, an important graphic element we use to contextualize the map.

Worst of all, we actually knew about this problem before we updated the iPad. The specific problem is iOS 15's inability, in web applications, to render geometries with more than a certain number of vertices. We were aware of the issue throughout the beta releases of iOS 15 but hoped it would be remedied with the final release. It was not and would not be fixed until iOS 16. I mention this to highlight what I still see as the constant high-wire act that developing native applications for mobile platforms continues to be.

In the end we were able to work around the iOS 15 problem using Brandon Liu's Protomaps. I don't have time to get in to that here so I will just say that I think Protomaps is the most exciting thing to happen in digital mapping in the last ten years.

Throughout these changes I have made sure to keep the old application in place such that the installation can be operated using a touch-based or a touch-less model with the flip of a switch. I recognize that people, present company included, are still wary of touching public surfaces but I also understand that those concerns are probably unwarranted given what we know after living with COVID-19 for nearly three years.

My own feeling is that an application you can control from your phone, without having to install any additional software, is kind of cool both as a user and a developer but that the original touch-based interface was both more inviting and more rewarding. It is unclear whether we will ever go back, though, so it's good to know we can develop these kinds of applications either way.

Finally, I like to believe there is always a larger meta-project in any given endeavour.

For me that has meant doing the extra work to share not the specific implementation of any one SFO Museum initiative but to decouple the underlying tools and software components that might be repurposed in other like-projects. The cultural heritage sector, and more broadly anything that might be dubbed the public sector, does not enjoy the capital resources or the head count of the private sector and, at least for the foreseeable future, never will.

No one else is going to save us and one of the things we need, in order to meet the public's expectations in a world of digital and networked technologies, is a better kit of tools. Not the big, everything-but-the-kitchen-sink initiatives of the past but all the different kinds of hammers, screwdrivers, chisels and other tools that we might use to fashion specific solutions tailored to our individual needs.

In November, 2020 we released a "multiscreen starter kit" package to demonstrate the basic bucket brigade message-relaying iOS application I've been describing. In a week or two we will release an updated version of that package demonstrating how to set up a network-enabled bucket brigade, along with an equivalent starter kit for the server component, so that you might develop your own "touch-free" applications.

Thank you.

As mentioned at the end of the talk we have published open source reference implementations (or "starter kits") for both the updated iOS application and the relay server. These applications are not the map application I presented at NACIS. They are deliberately simple and minimalist example applications to demonstrate how to dispatch messages between a variety of sources: A mobile device, a server, an iOS application and finally multiple "views" in that application. What messages you send and what your application does with them will be specific to your needs.

- https://github.com/sfomuseum/ios-multiscreen-starter/tree/main#hands-free

- https://github.com/sfomuseum/www-multiscreen-starter

Meanwhile, in the time it's taken to publish this blog post a couple of things have happened. First, NACIS has posted videos of the conference so you can also watch video of this talk if you'd like. Second, Richard Crowley posted an interesting Twitter thread about the design of internal tools that were built when he worked at Slack.

I insisted that we design our operational tools as compositions of smaller tools so that they could be broken down and recombined in novel ways during incidents.

This passage, in particular, struck a chord because it is a good articulation of the argument I was trying to make about a "kit of tools" at the end of my talk and, more generally, about fostering a practice of developing small focused tools in the cultural heritage sector.


#nacis