so that it may be remembered

Towards the end of the everyone gets a wunderkammer! blog post I wrote:

Code that can support any collection regardless of whether or not they have a publicly available API ... makes possible a few other things, but I will save that for a future blog post.

Here are five short videos to illustrate what I was talking about.

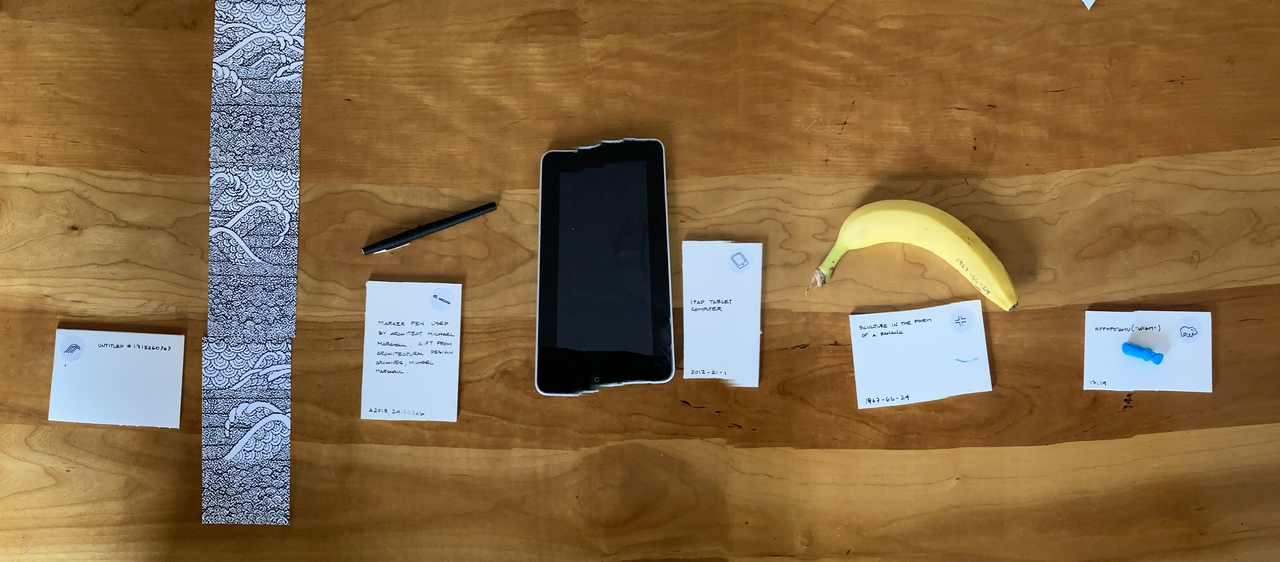

The first video shows the wunderkammer application reading a custom-made NFC tag for a first generation iPad which is part of the collection of the Smithsonian Cooper Hewitt National Design Museum. The application reads the tag and then uses the Cooper Hewitt API to look up information for that object.

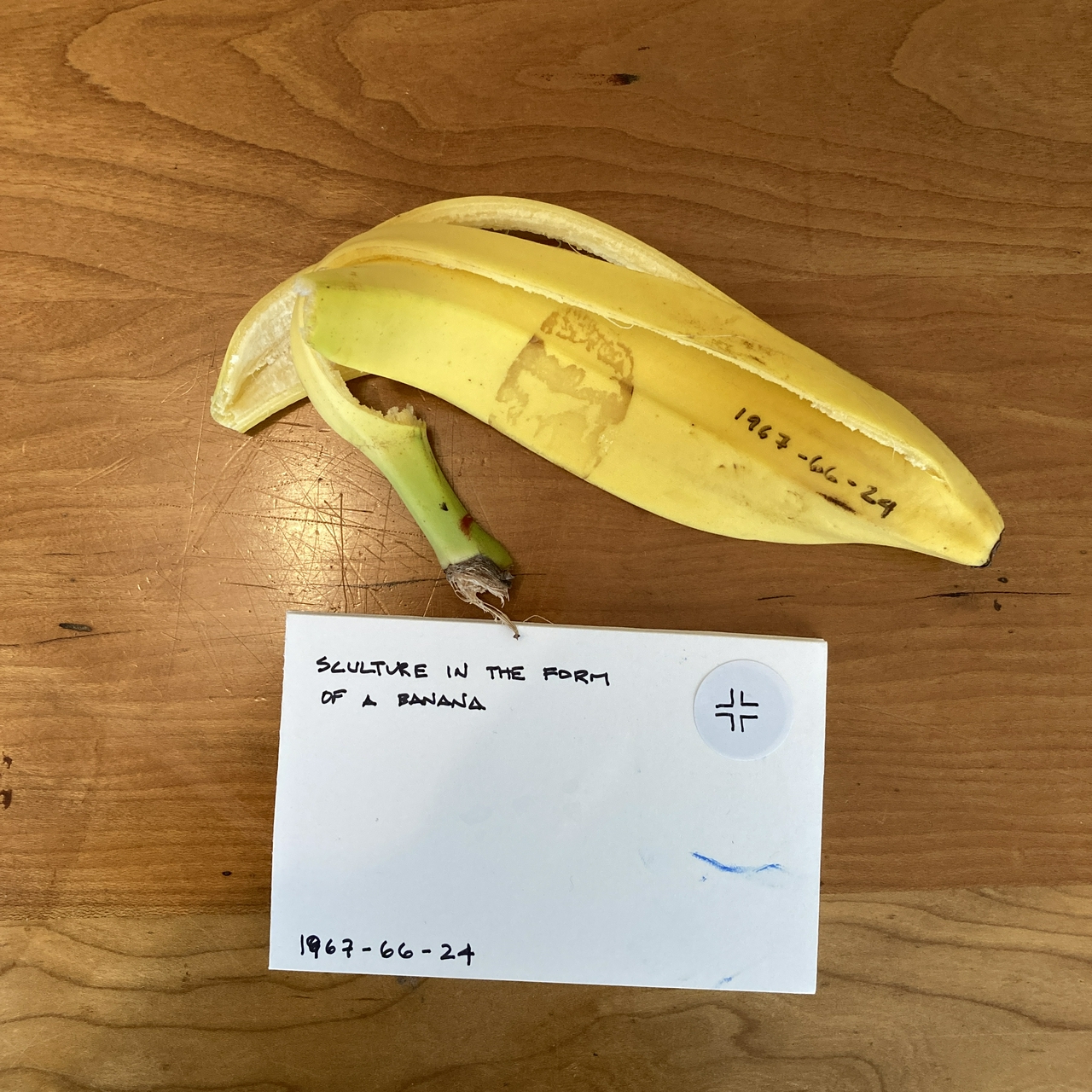

The second video depicts the same thing but for a different object in the collection, a sculpture in the form of a banana. This video is a bit superfluous but I discovered the object spelunking through the Cooper Hewitt collection using the wunderkammer application this morning so I decided to include it.

The third video is for an object in the Smithsonian National Museum of African American History and Culture (NMAAHC). It's a pen that belonged to the architect Michael Marshall. NMAAHC doesn't have any sort of public API for looking up objects. In this instance the wunderkammer application is looking up the ID in the NFC tag (si://nmaahc/o/A2018_24_1_1_1ab) in a local database produced from the Smithsonian Open Access dataset. The object's image is being retrieved from the Smithsonian's web servers but all the other information is local to the device that the wunderkammer application runs on.

The fourth video is for an object in the Metropolitan Museum of Art. It is an Egyptian sculpture of a hippopotamus colloquially known as "William". Like the pen in the NMAAHC collection, all of the information about this object, save for the image, is local to the device. The local data was produced using the Metropolitan Museum of Art Open Access Initiative dataset and two Go packages for creating a wunderkammer-compatible database from those objects, go-metmuseum-openaccess and go-wunderkammer.

The object in the video is not really a sculpture of William the hippopotamus. I grew up with a William made of cloth and stuffing and would have used that for the video if I could find it. Instead, I made a quick approximation out of children's polymer clay.

Here's an example of that data being filtered for objects marked as public domain and transformed into OEmbed records. The output is sent directly to a tool called wunderkammer-db that produces a SQLite database used by the wunderkammer application.

$> sqlite3 metmuseum.db < schema/sqlite/oembed.sqlite
$> /usr/local/go-metmuseum-openaccess/bin/emit \
	-oembed \
	-oembed-ensure-images \
	-with-images \
	-bucket-uri file:///usr/local/openaccess \
	-images-bucket-uri file:///usr/local/go-metmuseum-openaccess/data \
	| bin/wunderkammer-db \
	-database-dsn sql:///usr/local/go-wunderkammer/metmuseum.db
$ sqlite3 metmuseum.db
SQLite version 3.32.1 2020-05-25 16:19:56
Enter ".help" for usage hints.
sqlite> SELECT COUNT(url) FROM oembed;
236288

The fifth video is for one of my own Or This drawings, one that I made about a week ago. As with the Smithsonian and Metropolitan Museum of Art datasets, the wunderkammer application is retrieving all its data from a local orthis SQLite database. What's different about this video from the others is that the image is also being retrieved from the local database. If you look carefully you'll see that the phone has been disconnected from both the cellular and wireless networks.

The image data is being bundled in a new, and non-standard, OEmbed property called data_url which is a base64 encoded data URL of the image. When the wunderkammer saves an object locally it also saves a copy of the image and this new property is where that data is stored. This is part of the ongoing work to settle on the simplest and dumbest common format for working with collection data. In the last blog post I spoke about how all the collections in the wunderkammer application, including the wunderkammer's own local database of saved objects, conform to a common public Collection interface. That Collection interface in turn expects to work with a common, but modified, version of the OEmbed photo data structure.
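To make the data_url idea concrete, here is a minimal Go sketch of how a base64 encoded data URL could be built from raw image bytes. It is not the wunderkammer application's own code. The DataURL function name is hypothetical, and sniffing the media type with http.DetectContentType is an assumption about how the content type might be determined.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"net/http"
)

// DataURL encodes raw image bytes as a base64 data URL.
// Hypothetical helper: the media type is sniffed from the leading bytes,
// which is an assumption and not necessarily what the application does.
func DataURL(body []byte) string {
	contentType := http.DetectContentType(body)
	return "data:" + contentType + ";base64," + base64.StdEncoding.EncodeToString(body)
}

func main() {
	// The eight-byte PNG signature stands in for a real image file.
	png := []byte{0x89, 'P', 'N', 'G', 0x0D, 0x0A, 0x1A, 0x0A}
	fmt.Println(DataURL(png))
}
```

A string like this can be stored in the data_url property and later handed to anything that understands data URLs, which is what lets an image be displayed with no network connection at all.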

Here's the Go language definition for that modified data structure with the additional elements that the wunderkammer application uses:

package oembed

// Photo is an OEmbed "photo" record with two additions used by the
// wunderkammer application: object_uri and data_url.
type Photo struct {
	Version      string `json:"version" xml:"version"`
	Type         string `json:"type"`
	Width        int    `json:"width"`
	Height       int    `json:"height"`
	Title        string `json:"title"`
	URL          string `json:"url"`
	AuthorName   string `json:"author_name"`
	AuthorURL    string `json:"author_url"`
	ProviderName string `json:"provider_name"`
	ProviderURL  string `json:"provider_url"`
	ObjectURI    string `json:"object_uri"`           // obligatory
	DataURL      string `json:"data_url,omitempty"` // optional, base64 encoded data URL
}

Things are still in flux but I think they are close to being done. The structure adds an optional data_url property and an obligatory object_uri property to the OEmbed photo type. Here are some examples of object URIs, each unique to a different collection:

- In the case of the Cooper Hewitt the object_uri is chsdm:o:{OBJECT_ID}.
- In the case of the Smithsonian it is si://{SMITHSONIAN_COLLECTION}/o/{NORMALIZED_EDAN_ID}.
- In the case of the Metropolitan Museum of Art it is metmuseum://o/{OPENACCESS_ID}.
- In the case of the Or This drawings it is aa://orthis/{DRAWING_ID}.

The last three examples are all well-formed URIs with scheme, host and path elements. In the case of the Smithsonian and the Met I made some arbitrary choices since they haven't published their own schemes for NFC tags yet. They all differ from the Cooper Hewitt NFC tag format which is a list of colon-separated elements. That was a decision made way back in 2014 to account for the fact that NFC tags have a maximum capacity of 144 bytes.
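The difference between the two styles is easy to see with Go's standard net/url package. This is only a sketch of what a generic URI parser makes of each form, not how the wunderkammer application parses tags. Describe is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"net/url"
)

// Describe parses an object URI and reports the parts that net/url finds.
// Hypothetical helper for illustration only.
func Describe(raw string) (string, error) {
	u, err := url.Parse(raw)
	if err != nil {
		return "", err
	}

	// A scheme followed by something that does not start with a slash is
	// treated as an "opaque" URI: there is no host or path to speak of.
	if u.Opaque != "" {
		return fmt.Sprintf("scheme=%s opaque=%s", u.Scheme, u.Opaque), nil
	}

	return fmt.Sprintf("scheme=%s host=%s path=%s", u.Scheme, u.Host, u.Path), nil
}

func main() {
	uris := []string{
		"si://nmaahc/o/A2018_24_1_1_1ab",
		"chsdm:o:18458677",
	}

	for _, raw := range uris {
		d, err := Describe(raw)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s -> %s\n", raw, d)
	}
}
```

The Smithsonian URI breaks down into scheme, host and path, while the Cooper Hewitt tag is a scheme followed by an opaque colon-separated string that the application has to split for itself.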

It would have been, and would still be, nice to include a fully qualified address on the web in an NFC tag (https://collection.cooperhewitt.org/objects/18458677/ instead of chsdm:o:18458677). We already knew then that tags would need to accommodate wall labels with multiple objects so we opted for semantics that favoured brevity while trying to limit ambiguity. For example chsdm:o:{OBJECT_ID},{OBJECT_ID},{OBJECT_ID} instead of c:o... where it's not readily obvious what c means.
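Here is a small Go sketch of reading a tag in that colon-separated form, including the multiple-object case. It is an illustration under stated assumptions and not the code the Pen or the wunderkammer application uses: ParseCooperHewittTag and maxTagBytes are hypothetical names, the length check ignores whatever framing overhead a real tag carries, and the second and third object IDs in the example are placeholders.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// maxTagBytes is the tag capacity mentioned in the post. Treating it as a
// limit on the string itself is a simplification.
const maxTagBytes = 144

// ParseCooperHewittTag splits a tag of the form chsdm:o:{ID},{ID},... into
// its object IDs. Hypothetical helper for illustration only.
func ParseCooperHewittTag(tag string) ([]string, error) {
	if len(tag) > maxTagBytes {
		return nil, errors.New("tag exceeds 144 bytes")
	}

	parts := strings.SplitN(tag, ":", 3)
	if len(parts) != 3 || parts[0] != "chsdm" || parts[1] != "o" {
		return nil, fmt.Errorf("not a chsdm object tag: %q", tag)
	}

	ids := strings.Split(parts[2], ",")
	for _, id := range ids {
		if id == "" {
			return nil, fmt.Errorf("empty object ID in %q", tag)
		}
	}

	return ids, nil
}

func main() {
	// 18458677 comes from the post; the other two IDs are placeholders.
	ids, err := ParseCooperHewittTag("chsdm:o:18458677,11111111,22222222")
	if err != nil {
		panic(err)
	}
	fmt.Println(len(ids), ids)
}
```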

In the case of the wunderkammer application the only requirement is that an NFC tag contain a value which can be parsed as an RFC 6570 URI Template. Interestingly, for the museum sector, this decision means that most accession numbers can't be used in tag URIs since the "." symbol is a reserved character in URI templates.
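One way to cope with that restriction is to normalize identifiers before writing them to a tag. The sketch below assumes that normalization simply replaces dots with underscores, which is a guess inferred from the A2018_24_1_1_1ab example earlier in this post and not a documented rule. NormalizeID and ObjectURI are hypothetical names and the dotted input is made up for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// NormalizeID replaces every "." in an identifier with "_".
// Assumption: this is inferred from the example IDs, not a published rule.
func NormalizeID(id string) string {
	return strings.ReplaceAll(id, ".", "_")
}

// ObjectURI builds a si:// style object URI for a collection and identifier.
func ObjectURI(collection string, id string) string {
	return "si://" + collection + "/o/" + NormalizeID(id)
}

func main() {
	// The dotted identifier is a hypothetical input.
	fmt.Println(ObjectURI("nmaahc", "A2018.24.1.1.1ab"))
}
```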

In this model the object_uri becomes the primary key for the object, but not for the SQLite databases that the wunderkammer application works with. There the primary key remains the OEmbed (photo) url property, which is one of many possible representations of an object. Here's what the database schema for these databases looks like:

CREATE TABLE oembed (
	url TEXT PRIMARY KEY,
	object_uri TEXT,
	body TEXT
);

CREATE INDEX `by_object` ON oembed (`object_uri`);

For example, there are three images of the pen owned by Michael Marshall in the NMAAHC collection. There are 16 images of sneakers worn by Julius "Dr. J" Erving. In fact there are generally many different representations, and kinds of representations, associated with any given object so this feels like a good way to prepare for the wunderkammer application to accommodate them going forward.
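The one-to-many relationship between an object and its representations can be sketched without a database at all. The Go example below uses an in-memory slice as a stand-in for the oembed table and does the equivalent of SELECT body FROM oembed WHERE object_uri = ?. The Record type, the ByObject function and the example.com image URLs are all hypothetical.

```go
package main

import "fmt"

// Record is an in-memory stand-in for one row of the oembed table.
type Record struct {
	URL       string // primary key: one representation of the object
	ObjectURI string // shared by every representation of the same object
	Body      string // the encoded OEmbed record
}

// ByObject returns every record whose object_uri matches, in table order.
func ByObject(records []Record, objectURI string) []Record {
	var matches []Record
	for _, r := range records {
		if r.ObjectURI == objectURI {
			matches = append(matches, r)
		}
	}
	return matches
}

func main() {
	// Placeholder rows: three images of one object and one image of another.
	records := []Record{
		{URL: "https://example.com/pen/1.jpg", ObjectURI: "si://nmaahc/o/A2018_24_1_1_1ab"},
		{URL: "https://example.com/pen/2.jpg", ObjectURI: "si://nmaahc/o/A2018_24_1_1_1ab"},
		{URL: "https://example.com/other/1.jpg", ObjectURI: "aa://orthis/example"},
		{URL: "https://example.com/pen/3.jpg", ObjectURI: "si://nmaahc/o/A2018_24_1_1_1ab"},
	}

	for _, r := range ByObject(records, "si://nmaahc/o/A2018_24_1_1_1ab") {
		fmt.Println(r.URL)
	}
}
```

Scanning a tag yields an object URI, and the lookup returns every image recorded for it. Which of those to show or save is then a decision for the application.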

As I write this the wunderkammer application only shows the first image for a given object when its NFC tag has been scanned. Thinking about how to display multiple images when a tag is scanned and whether to save all of them when a person collects an object will be the next focus of work since it coincides nicely with the code to display locally saved objects.

In the first blog post about all of the work that has led to the wunderkammer application I wrote:

The phrase "NFC tag" should be understood to mean "any equivalent technology". Everything I've described could be implemented using QR codes and camera-based applications. QR codes introduce their own aesthetic and design challenges given the limited space on museum wall labels but when weighed against the cost and complexity of deploying embedded NFC tags they might be a better choice for some museums.

What I've been describing doesn't need to be done with NFC tags. It could also be done with QR codes, by photographing wall labels and using OCR software to extract accession numbers or even by using image detection algorithms to identify a given object in a photo. All of these are just different ways of resolving a thing that a person is looking at to an identifier that can be found in a database so that it may be remembered.

"sculture" is either. My only excuse is that it was still early in the morning when I put this together and I hadn't completely woken up yet.

It's also really important to understand that I haven't done anything novel with NFC tags. Everything described in these blog posts has been possible to do with Android devices since 2014, before the Cooper Hewitt Pen even launched.

In 2020 you can get a decent NFC-enabled Android phone for a hundred dollars and probably less if you buy in volume. With a little bit of effort those devices could be configured to have a "kill switch" if and when they left the museum property, similar to the one Apple uses to prevent theft in their stores. This would go a long way towards mitigating the need to ask visitors for their driver's license or credit card when handing out the devices. The lesson of both The O at the Museum of Old and New Art and The Pen at Cooper Hewitt is that if you want to ensure adoption for a given tool then you make it freely available without asking for anything in return.

Android devices even have public APIs for developers to use host card emulation (HCE) which allows the device to be programmed to act as though it were an NFC tag. This is widely understood to be how Apple Pay works, for example, but it is functionality only available to applications that Apple controls. HCE in a museum context offers a number of interesting opportunities. I probably wouldn't recommend installing a fleet of Android phones in the walls and gallery cases of a museum but, for a unit cost of $100, there are a number of places where a tiny computer with a screen, an internet connection, sophisticated graphics and rendering capabilities and the ability to programmatically broadcast itself (HCE) would be ripe with possibility.

The story as I heard it was that, sometime before my arrival at Cooper Hewitt, the museum was working on an ad campaign with posters and stickers depicting an arrow and a word balloon that said "This is design". The idea was they would be placed all over New York City pointing at the kinds of things that people might not think were design. Things that people might not associate with a design museum. We thought about this a lot while we were bringing the Pen to life because, setting aside the reality of not being able to let people take the Pens home with them, those word balloons would have been an obvious place to put NFC tags. The thinking was that you could walk around the city and continue collecting things just like you did in the museum.

I mention this because it's the first thing I thought of when Apple announced their upcoming App Clip technology last month. Here's an excerpt from the description for the Explore App Clips developer session:

We'll explain how to design and build an app clip — a small part of your app that focuses on a specific task — and make it easily discoverable. Learn how to focus your app clip on short and fast interactions and identify contextually-relevant situations where you can surface it, like a search in Maps or at a real-world location through QR codes, NFC, or app clip codes.

Essentially, App Clips are trimmed down applications that your phone downloads and runs, on-demand, when you scan a URL. The emphasis is on the "trimmed down" part. App Clips don't expose all the functionality of an application but rather select interactions. Most of the examples I've seen seem to involve making it easier for people to spend money but if you step back from that it's pretty easy to see how App Clips are basically the Pen: Scan a tag, save it to your account, go back to whatever you were doing before.

The phrase "applications that your phone downloads and runs, on-demand, when you scan a URL" should fill you with dread. It's a scenario that is primed for abuse. As such App Clips don't allow arbitrary URLs to invoke arbitrary applications. Both the App Clips and their URLs need to be registered with and delegated by Apple services and those URLs need to have a corresponding presence on the web that you also control: https://collection.cooperhewitt.org/objects/18458677/ instead of chsdm:o:18458677.

I have mixed feelings about App Clips, specifically the part where Apple acts as a gatekeeper for every interaction. There are legitimate security reasons for doing things this way but it smells like the kind of technological quicksand that is difficult or impossible to escape once you've taken the plunge.

From the perspective of the wunderkammer application it means the only way a common wunderkammer style application, spanning multiple collections and institutions, would work with App Clips is if there was only a single wunderkammer application under the control of one individual or group.

It would need to be a single organization that controlled a common wunderkammer domain and namespace, so either a large and often problematic consortium like ArtStor or the for-profit client-services companies that pay for lunches and drinks at museum conferences. Sadly, there rarely seems to be anything in between those two options.

In the original bring your own pen device blog post I wrote:

I built [the wunderkammer] application because I wanted to prove that it is within the means of cultural heritage institutions to do what we did with the Pen, at Cooper Hewitt, sector-wide.

In the second everyone gets a wunderkammer! blog post I wrote:

It is a tool I am building because it's a tool that I want and it helps me to prove, and disprove, some larger ideas about how the cultural heritage sector makes its collections available beyond "the museum visit" ... and offered to the cultural heritage sector in a spirit of generosity.

At the same time I don't really believe that the cultural heritage sector will do anything with this work until it is repackaged and resold back to the sector as a "new" offering by a third-party vendor. It's pretty obvious that something which replicates the functionality of the Pen independent of its form is something that museum "customers" want. That's not a comment about the wunderkammer application specifically but about most of the things the sector tries to do by and for itself. That's also a big subject, full of nuance and complexity, so I will try to write about it more in future blog posts.

Until then I will continue working to make the wunderkammer better one tiny feature at a time.
