everyone gets a wunderkammer!

There have been a handful of significant updates to the wunderkammer application I talked about in the bring your own pen device

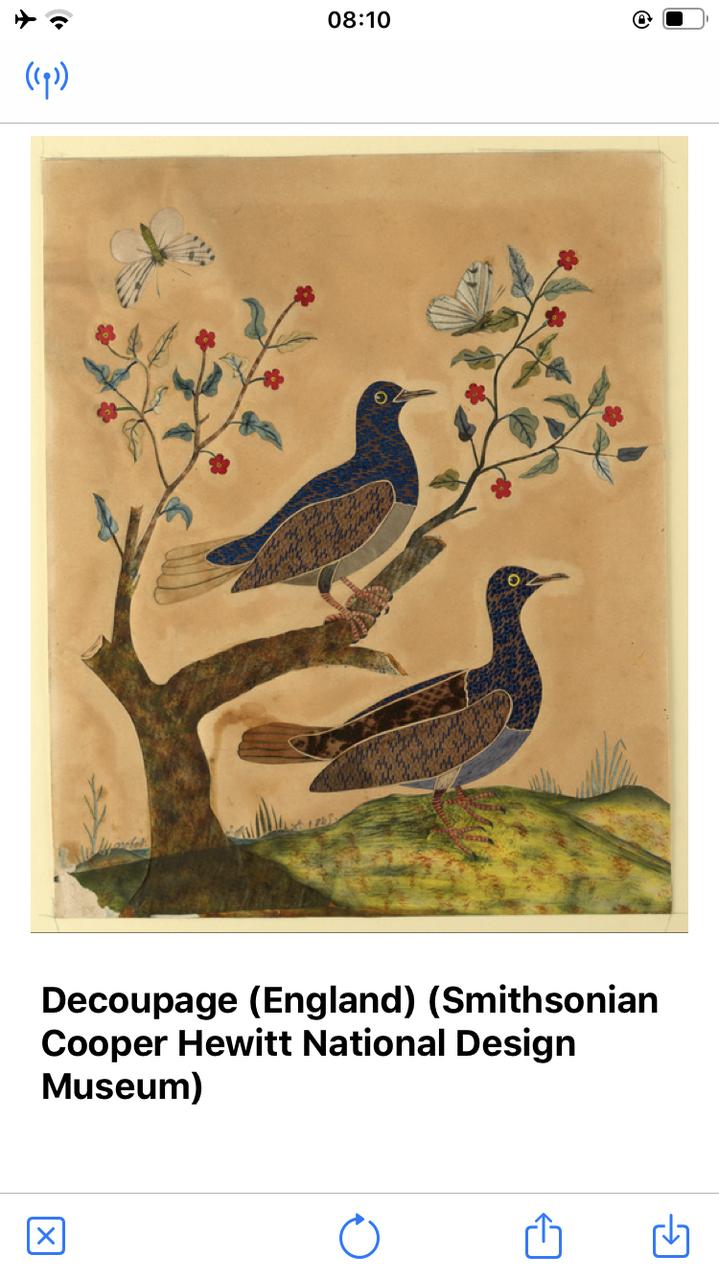

blog post. The application now has the ability to work with multiple collections, specifically SFO Museum and the whole of the Smithsonian. Clicking on an image will open the web page for that object and there is support for the operating system's share

option to send an object's URL to another person or application.

The latest release also introduces the notion of capabilities for each collection. Some collections, like the Cooper Hewitt, support NFC tag scanning and querying for random objects while some, like SFO Museum and the Smithsonian, only support the latter. Some collections (Cooper Hewitt) have fully fledged API endpoints for retrieving random objects. Some collections (SFO Museum) have a simpler and less-sophisticated oEmbed endpoint for retrieving random objects. Some collections (Smithsonian) have neither but, because the wunderkammer application bundles all of the Smithsonian collection data locally, it's able to query for random items itself.

In fact the wunderkammer application's own database is itself modeled as a collection. It doesn't have the capability to scan NFC tags or query for random objects (yet) but it does have the ability to save collection objects.
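To make the idea of capabilities concrete, here is a minimal sketch of how they might be modeled. The case names (`nfcTags`, `randomObject`, `saveObject`), the `CollectionSketch` type and the `availableActions` helper are all assumptions made for illustration; they are not taken from the application's source. The capability sets mirror only what is described above.

```swift
// Hypothetical capability names; the application's real cases are not shown in this post.
enum CollectionCapabilities: Hashable {
    case nfcTags
    case randomObject
    case saveObject
}

// A simplified stand-in for a collection: a name and the set of things it can do.
struct CollectionSketch {
    let name: String
    let capabilities: Set<CollectionCapabilities>

    func hasCapability(_ capability: CollectionCapabilities) -> Bool {
        return capabilities.contains(capability)
    }
}

// Capability sets as described in the text above.
let cooperHewitt = CollectionSketch(name: "Cooper Hewitt", capabilities: [.nfcTags, .randomObject])
let sfoMuseum = CollectionSketch(name: "SFO Museum", capabilities: [.randomObject])
let smithsonian = CollectionSketch(name: "Smithsonian", capabilities: [.randomObject])
let wunderkammer = CollectionSketch(name: "wunderkammer", capabilities: [.saveObject])

// An application could use the capabilities to decide which controls to show.
func availableActions(for collection: CollectionSketch) -> [String] {
    var actions: [String] = []
    if collection.hasCapability(.nfcTags) { actions.append("scan") }
    if collection.hasCapability(.randomObject) { actions.append("random") }
    if collection.hasCapability(.saveObject) { actions.append("save") }
    return actions
}
```

The point of asking a collection what it can do, rather than checking which collection it is, is that the user interface never needs to know about any specific institution.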

Collections are described using a Swift language protocol definition that looks like this:

    public protocol Collection {
        func GetRandomURL(completion: @escaping (Result<URL, Error>) -> ())
        func SaveObject(object: CollectionObject) -> Result<CollectionObjectSaveResponse, Error>
        func GetOEmbed(url: URL) -> Result<CollectionOEmbed, Error>
        func HasCapability(capability: CollectionCapabilities) -> Result<Bool, Error>
        func NFCTagTemplate() -> Result<URITemplate, Error>
        func ObjectURLTemplate() -> Result<URITemplate, Error>
        func OEmbedURLTemplate() -> Result<URITemplate, Error>
    }

Each collection implements that protocol according to its capabilities and specific requirements but they all present a uniform interface for communicating with the wunderkammer application. There is a corresponding CollectionOEmbed protocol that looks like this:

    public protocol CollectionOEmbed {
        func ObjectID() -> String
        func ObjectURL() -> String
        func ObjectTitle() -> String
        func Collection() -> String
        func ImageURL() -> String
        func Raw() -> OEmbedResponse
    }
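As an illustration of what conforming to that protocol might involve, here is a rough sketch that wraps a decoded oEmbed `photo` response. The `OEmbedResponse` struct below is a hypothetical stand-in limited to fields from the oEmbed specification, `ExampleOEmbed` is an invented type, and the choices to read the object URL from `author_url`, the collection name from `provider_name` and the object ID from the last path component of the object URL are assumptions of this sketch rather than how any particular collection does it.

```swift
import Foundation

// Hypothetical stand-in for OEmbedResponse, limited to fields from the oEmbed "photo" type.
struct OEmbedResponse: Codable {
    let version: String
    let type: String
    let url: String
    let width: Int
    let height: Int
    let title: String?
    let author_name: String?
    let author_url: String?
    let provider_name: String?
}

// The protocol from the post, repeated so the sketch is self-contained.
protocol CollectionOEmbed {
    func ObjectID() -> String
    func ObjectURL() -> String
    func ObjectTitle() -> String
    func Collection() -> String
    func ImageURL() -> String
    func Raw() -> OEmbedResponse
}

struct ExampleOEmbed: CollectionOEmbed {
    private let response: OEmbedResponse

    init(data: Data) throws {
        response = try JSONDecoder().decode(OEmbedResponse.self, from: data)
    }

    // Assumption: the object's web page is carried in author_url.
    func ObjectURL() -> String { return response.author_url ?? "" }

    // Assumption: the object ID is the last path component of the object URL.
    func ObjectID() -> String {
        return URL(string: ObjectURL())?.lastPathComponent ?? ""
    }

    func ObjectTitle() -> String { return response.title ?? "" }
    func Collection() -> String { return response.provider_name ?? "" }
    func ImageURL() -> String { return response.url }
    func Raw() -> OEmbedResponse { return response }
}

// Made-up sample data for an imaginary museum.
let sampleJSON = """
{"version": "1.0", "type": "photo", "url": "https://example.org/images/1234.jpg",
 "width": 800, "height": 600, "title": "A model airplane",
 "author_name": "Example Museum", "author_url": "https://example.org/objects/1234",
 "provider_name": "Example Museum"}
"""

let sampleRecord = try? ExampleOEmbed(data: Data(sampleJSON.utf8))
```

Each collection would supply its own mapping in the same way, which is what lets the rest of the application ignore the differences between them.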

There is still a need for an abstract collection-specific oEmbed protocol, both because some of the attributes necessary for a wunderkammer-style application aren't defined in the oEmbed specification and because different collections return that data in different ways. To date the work on the wunderkammer application has used oEmbed as the storage and retrieval protocol for both objects, which may have multiple representations, and each of the atomic representations that depict the same object.

The database models, as they are currently defined, don't necessarily account for or allow all the different ways people may want to do things but I've been modeling things using oEmbed precisely because it is so simple to extend and reshape.

In the first blog post about the wunderkammer application I wrote:

Providing an oEmbed endpoint is not a zero-cost proposition but, setting aside the technical details for another post, I can say with confidence it's not very hard or expensive either. If a museum has ever put together a spreadsheet for ingesting their collection in to the Google Art Project, for example, they are about 75% of the way towards making it possible. I may build a tool to deal with the other 25% soon but anyone on any of the digital teams in the cultural heritage sector could do the same. Someone should build that tool and it should be made broadly available to the sector as a common good.

I remain cautiously optimistic that the simplest and dumbest thing going forward is simply to define two bespoke oEmbed types: collection_object and collection_image. Both would be modeled on the existing photo type with minimal additional properties to define an object URL alongside its object image URL and a suitable creditline. These are things which can be shoehorned in to the existing author and author_url properties but it might also be easiest just to agree on a handful of new key value pairs to meet the baseline requirements for showing collection objects across institutions.
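A sketch of what such a record might look like follows. The type names `collection_object` and `collection_image` come from the paragraph above, and `version`, `type`, `title`, `url`, `width` and `height` come from the oEmbed photo type. The two extra keys, `object_url` and `creditline`, are hypothetical names chosen for this example; nothing here is an agreed-upon standard, and the values are made up.

```swift
import Foundation

// The two bespoke oEmbed types proposed above.
enum CollectionOEmbedType: String, Codable {
    case object = "collection_object"
    case image = "collection_image"
}

struct CollectionRecord: Codable, Equatable {
    // Properties carried over from the oEmbed "photo" type.
    let version: String
    let type: CollectionOEmbedType
    let title: String
    let url: String      // the object image URL
    let width: Int
    let height: Int

    // Hypothetical additions: the key names are assumptions of this sketch.
    let object_url: String
    let creditline: String
}

// Made-up values for an imaginary museum.
let exampleRecord = CollectionRecord(
    version: "1.0",
    type: .image,
    title: "A model airplane",
    url: "https://example.org/images/1234.jpg",
    width: 800,
    height: 600,
    object_url: "https://example.org/objects/1234",
    creditline: "Gift of an example donor"
)

// Print the record as JSON, with sorted keys so the output is stable between runs.
let exampleEncoder = JSONEncoder()
exampleEncoder.outputFormatting = [.prettyPrinted, .sortedKeys]

if let data = try? exampleEncoder.encode(exampleRecord),
   let json = String(data: data, encoding: .utf8) {
    print(json)
}
```

Because the record is the photo type plus two keys, a consumer that only understands plain oEmbed could still read the fields it knows about, provided it tolerates the unfamiliar `type` value.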

This approach follows the work that SFO Museum has been doing to create the building blocks for a collection agnostic geotagging application:

oEmbed is not the only way to retrieve image and descriptive metadata information for a “resource” (for example, a collection object) on the web. There’s a similar concept in the IIIF Presentation API that talks about “manifest” files. The IIIF documentation states that:

The manifest response contains sufficient information for the client to initialize itself and begin to display something quickly to the user. The manifest resource represents a single object and any intellectual work or works embodied within that object. In particular it includes the descriptive, rights and linking information for the object. It then embeds the sequence(s) of canvases that should be rendered to the user.

Which sounds a lot like oEmbed, doesn’t it? The reason we chose to start with oEmbed rather than IIIF is that while neither is especially complicated the former was simply faster and easier to set up and deploy. This echoes the rationale we talked about in the last blog post about the lack of polish, in the short-term, for the geocoding functionality in the go-www-geotag application:

We have a basic interaction model and we understand how to account for its shortcomings while we continue to develop the rest of the application.

We plan to add support for IIIF manifests ... but it was important to start with something very simple that could be implemented by as many institutions as possible with as little overhead as possible. It’s not so much that IIIF is harder as it is that oEmbed is easier, if that makes sense.

Almost everything that the wunderkammer application does is precisely why the IIIF standards exist. There would be a real and tangible benefit in using those standards and in time we might. I also hope that there is benefit in demonstrating, by virtue of not starting with IIIF, some of the challenges in using those standards. I have a pretty good understanding of how IIIF is designed and meant to work but I also looked elsewhere, at the oEmbed specification, when it came time to try and build a working prototype. I offer that not as a lack of support for the IIIF project but as a well-intentioned critique of it.

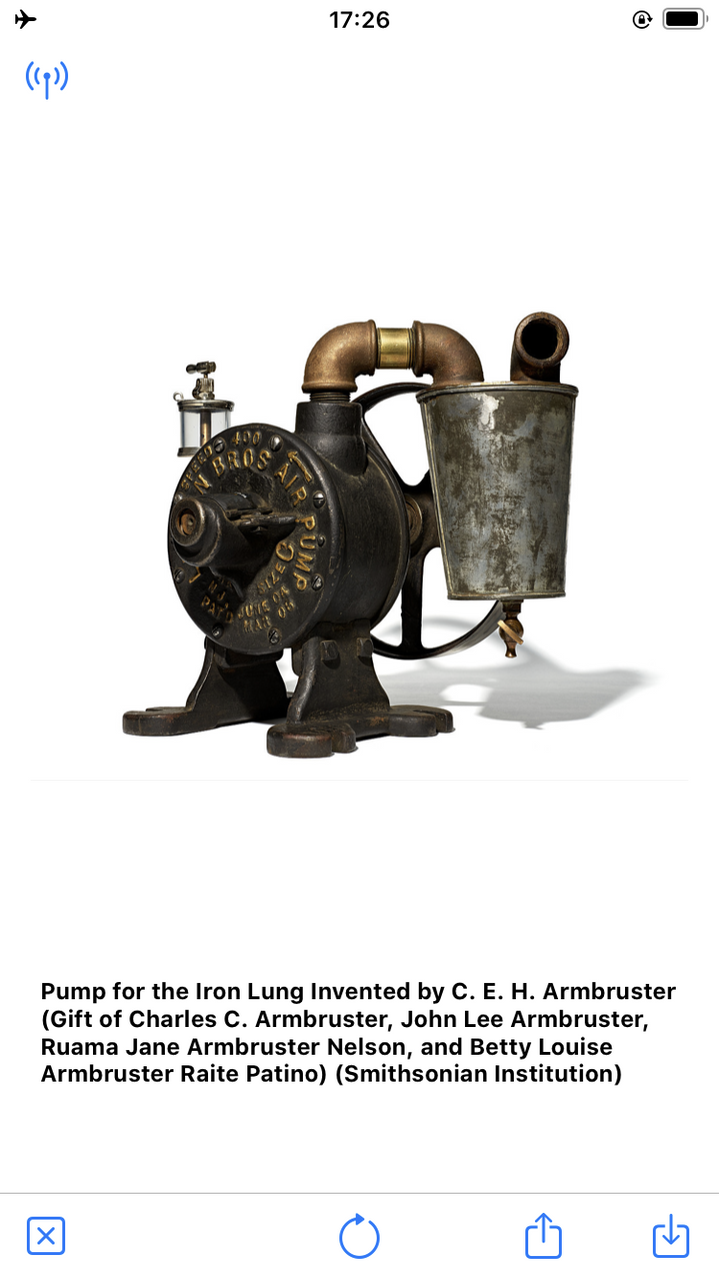

Earlier I said that the wunderkammer application bundles all of the Smithsonian collection data locally. This is done by producing SQLite databases containing pre-generated oEmbed data from the Smithsonian Open Access Metadata Repository, which contains 11 million metadata records, approximately 3 million of which have openly licensed object images.

These SQLite databases are produced using two Go language packages, go-smithsonian-openaccess for reading the Open Access data and go-smithsonian-openaccess-database for creating the oEmbed databases, and copied manually in to the application's documents folder. This last step is an inconvenience that needs to be automated, probably by downloading those databases over the internet, but that is still work for a later date.

Here is how I created a database of objects from the National Air and Space Museum:

    $> cd /usr/local/go-smithsonian-openaccess-data

    $> sqlite3 nasm.db < schema/sqlite/oembed.sqlite

    $> /usr/local/go-smithsonian-openaccess/bin/emit -bucket-uri file:///usr/local/OpenAccess \
       -oembed \
       metadata/objects/NASM \
       | bin/oembed-populate \
       -database-dsn sql://sqlite3/usr/local/go-smithsonian-openaccess-database/nasm.db

    $> sqlite3 nasm.db
    sqlite> SELECT COUNT(url) FROM oembed;
    2407

The wunderkammer application also supports multiple databases associated with a given collection. This was done to accommodate the Smithsonian collection which yields a 1.5GB document if all 3 million image records are bundled in to a single database file.
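One question that multiple databases raise is how to pick a random object fairly when the databases are different sizes. The following is a sketch of one possible approach, not a description of what the application does: treat all the databases as one long list of records, pick a global offset and map it back to a database and a local offset. The `DatabaseShard` type, the function names and the `example.db` file with its record count are hypothetical; the count for `nasm.db` is the one reported by the query above.

```swift
// Hypothetical description of one SQLite database file belonging to a collection.
struct DatabaseShard {
    let path: String
    let recordCount: Int
}

// Map an offset in to the combined list of records to a database and an offset within it.
func locate(offset: Int, in shards: [DatabaseShard]) -> (shard: DatabaseShard, localOffset: Int)? {
    guard offset >= 0 else { return nil }
    var remaining = offset
    for shard in shards {
        if remaining < shard.recordCount {
            return (shard, remaining)
        }
        remaining -= shard.recordCount
    }
    // The offset is past the end of the last database.
    return nil
}

// Pick a record uniformly at random across every database.
func randomLocation(in shards: [DatabaseShard]) -> (shard: DatabaseShard, localOffset: Int)? {
    let total = shards.reduce(0) { $0 + $1.recordCount }
    guard total > 0 else { return nil }
    return locate(offset: Int.random(in: 0..<total), in: shards)
}

let shards = [
    DatabaseShard(path: "nasm.db", recordCount: 2407),
    DatabaseShard(path: "example.db", recordCount: 1000),  // made-up second database
]

if let location = randomLocation(in: shards) {
    // The local offset could then be used in a query against the oembed table,
    // for example: SELECT url FROM oembed LIMIT 1 OFFSET ?
    print("\(location.shard.path) at offset \(location.localOffset)")
}
```

Weighting by record count means an object in a small database is no more likely to turn up than an object in a large one.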

One notable thing about the code that handles the Smithsonian data is that it's not really specific to the Smithsonian. It is code that simply assumes everything about a collection is local to the device, stored in one or more SQLite databases, with the exception being object image files that are still assumed to be published on the web. It is code that can, and will, be adapted to support any collection with enough openly licensed metadata to produce oEmbed-style records. It is code that can support any collection regardless of whether or not it has a publicly available API.

It is also code that makes possible a few other things, but I will save that for a future blog post.

The wunderkammer application itself is still very much a work in progress and not ready for general use, if only because it is not available on the App Store and requires that you build and install it manually. I don't know whether or not I will make the application available on the App Store at all. It is a tool I am building because it's a tool that I want and it helps me to prove, and disprove, some larger ideas about how the cultural heritage sector makes its collections available beyond the museum visit.

It is code that is offered to the cultural heritage sector in a spirit of generosity and if you'd like to help out there is a growing list of details to attend to in order to make the application better and more useful.


#wunderkammer