#2009

Somewhere over Nevada on the way to New York, the airplane wireless places me at Boston's Logan airport. The future is here, Myles, it's just not sure where that is exactly.

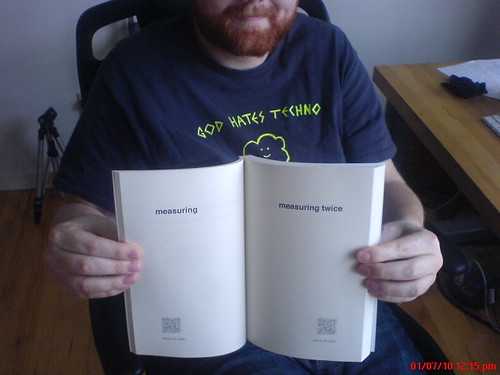

I mention that only because I made a thing, sitting in the airport in Tampa, at the beginning of the week. I made a book.

I run a cron job every day that calls the Twitter API and fetches my most recent updates and saves each one as a separate XML file to a local computer in nested YYYY/MM/DD folders. That's all it does. It's been running every day since April 2008 and, until now, I've not done anything with all those files. One reason that I run the cron job in the first place is that not backing up your stuff on third-party services is just silly. That's not meant as a judgment or a finger-pointy thing, against any particular company or cloud-castle. It's just that many copies keeps stuff safe.

So I figured I would make a book of all my Twitter messages, from 2009.

James did this last year and his book has a whale on it. At the time, he wrote: When Twitter is inevitably replaced by something else, I don’t want to lose all those incidentals, the casual asides, the remarks and responses. That’s all really. This seems like a nice way to do it...

James opted for a Proper Book layout but since I never post often enough to create anything like a normal narrative I decided to stay sparse and use big letters floating in whitespace, which is probably what the messages seem like as they are posted. (The little seredipities created by having messages placed side-by-side is just icing.) Also, aside from a personal historical record the whole thing makes for a nicer, not to mention, funnier end of year holiday letter. I've never sent holiday letters but I have sent one the books to an old friend who doesn't spend much time on the Internet and I hope it will be like getting a nice, long postcard that affords the scent of the year even if she wasn't there to see the whole thing.

Also, the thought that some day someone (probably me) might have to OCR the book back in to digital form makes me laugh.

I spent a little bit of time adding an index of all the words used over the year but eventually decided against it. Aside from the grunt work involved in generating and laying out the index itself the addition of page numbers introduced a one visual distraction too many.

Which didn't stop me from adding QR codes. Each QR

code encodes the URL of the message itself which is,

after all, a thing of the web and it seemed

wrong not to honour that. I tried to make the codes

themselves discrete (read: grey) but they can still be

read by the clunky 5-year old barcode reader

that

still ships with every Nokia phone. I'm not sure that

the bottom of the page is necessarily the best place to

put QR codes but now that we are living in a world where

people are building useful barcode readers, I figure

it's worth experimenting again.

The nuts and bolts involve a Perl script that fetches

the posts and a PHP script to turn them into a PDF

file. The Perl-y bits do not handle fetching stuff in the

past, at the moment. That would be pretty easy to do but

it involves code to deal with pagination and keeping

track that you haven't made more than 100 API calls in

the last hour and pausing until the next hour if you

have and so on. The PHP part simply takes two arguments:

the path to the directory containing all the messages

for a year and the location where your book

should be saved.

I've been using Lulu to print, so far, and if you can get past the awfulness of all their tools for creating book covers it's been pretty good.

The

code is part of the twitter-tools

project, on

GitHub. It all still needs some work to finish the

polish and packaging and maybe it warrants a complete re-write for

someone with the ambition (or just a dislike of Perl)

but, for now, it works for me. Enjoy.

This blog post is full of links.

#2009Woelr

Don't worry, Myles. I'm indexing all of these blog posts. No, really.

Whatever else may be said about the acquisition by Yahoo! there are two

things that pretty much everyone agrees were good for

Flickr: Vespa (the magic Scandawegian document index

that we (we

) use for search) and Where on Earth (or WOE, since renamed

GeoPlanet but I can never remember to call it that and the Y!Geo people always get cross with me...) which we used for all things geo-related. The nice thing about both is

that they leant themselves to being used for all kinds of stuff that they weren't capital-D

designed for.

Vespa made it possible to do proper search as we grew and grew and grew (and at a time when we were federating the databases) but it also let us add radial queries, support for machine tags — the machine tag extras and hierarchies stuff is still done using MySQL, for what it's worth — and the ability to roll up (or facet) all that data to show the top tags for a place or build API applications like Flickr For Busy People and [redacted]. Vespa is a non-trivial piece of infrastructure to maintain and it's not a magic pony but I sure do miss having it to play with.

WOE made it possible to let users geocode their photos globally instead of being limited to a US/Euro -centric subset largely determined by marketing and target demographics, to reverse-geocode (with a little help and coaxing along the way) machine-readable locations back in to human readable names and to use a unique set of identifiers for places (WOE IDs) that can be shared, in a hawt linked-data kind of way, with the rest of the Internets.

Both of these are magic shiney boxes and very much proprietary. Yahoo! did more that most by deciding to release all of the WOE IDs and names under a Creative Commons license and we tried to do our part by also releasing the shapefiles for places (neighbourhoods, localities, etc.) derived from geotagged photos, but there's nothing wrong with a business wanting to hold on to their secret sauce.

Still, I miss them.

So I finally got around to looking at Solr, a web-based wrapper for Doug Cuttings' Lucene document indexer. I don't know enough about search thingies to offer any kind of educated opinion about the differences between Vespa and Solr under the hood but conceptually Solr does most of things that I'd come to take for granted at Flickr including a brain-dead easy HTTP interface for searching and updating the index. I don't know that Solr would ever be able to index as fast as Vespa, at least not in the kind of environment we were running. There are always tricks and solutions but then again it's probably not something most people need to worry about. At least not to get started.

Facetting. Solr does it out of the box.

Here are all the places in the GeoPlanet database with the word Museum

in their name(s) facetted by country:

# /select?q=museum&facet=true&facet.field=iso&facet.mincount=1&rows=0&wt=json

facet_counts":{

"facet_queries":{},

"facet_fields":{

"de",628,

"us",474,

"in",109,

"ca",104,

"gb",80,

"nl",75,

"ch",45,

"se",44,

"au",36,

"no",30,

"at",25,

"ee",23,

"dk",21,

"il",21,

"cn",18,

"be",17,

"lv",14,

"lt",13,

"eg",11,

"cy",9,

"fr",8,

"gr",8,

"id",7,

"nz",6,

"sg",6,

"bo",5,

"cz",5,

"my",5,

"iq",4,

"is",4,

"jo",4,

"th",4,

"tr",4,

"jm",3,

"kw",3,

"ps",3,

"bh",2,

"cr",2,

"lk",2,

"pk",2,

"za",2,

"ae",1,

"al",1,

"bd",1,

"it",1,

"jp",1,

"ke",1,

"kh",1,

"lb",1,

"li",1,

"ru",1,

"sa",1]},

Which looks pretty much like it could be generated by a vanilla GROUP BY statement in a relational database. And it could. The point is not to join the chorus of voices heralding the end of SQL but rather to get excited about some of the functionality of relational databases, which frankly aren't that good at search, being added to ... search engines.

You can facet on any field that's been indexed which means that it's not without a cost but so far my experience has been that for an initial exploration of a dataset, at least, it's often easier and cheaper to update a Solr schema and refeed all the data (even from a relational database) than it is to add multiple (and always with the LEFTMOST-iness) indexes to the same database.

The other thing it can do is facet dates. That's pretty exciting if for no other reason than so-called linear searches

(so-called because I can't think of a better name) are boring and kind of pointless. Searches results ranked by a single criteria are still useful but not for everything and certainly not for a lot of stuff that the Internets have been responsible for. It's not that facetting by date (there were 100 photos of kittens uploaded yesterday, but only five the day before and 300 the month before that) is the one-true solution to all our relevancy woes but it's a really useful way to help people glean some basic understanding from a giant pile of possibilities.

And geo.

There's a lot of work being done to add spatial searching to the next major release (1.5) of Solr but in the meantime the nice folks at JTeam in the Netherlands simply read the thread on adding geo support on the Solr bug tracker and implemented the basics (radial queries) as Solr plugin. There's a little bit of fiddling that needs to happen in the Solr config files to get it working but otherwise it's about as easy as dropping a .jar file in specific folder.

Here are all the places with garden

in their name within 10 kilometers of London's Kensington Garden (which curiously isn't part of GeoPlanet):

# Hey look! See how this example sayslongand the docs on the JTeam # site saylng. That's because the docs are wrong — uselong. # Note the use of the fl parameter for the sake of brevity. # /select?q={!spatial lat=51.500152 long=-0.126236 radius=10 calc=arc unit=km}garden&fl=woeid,name,latitude,longitude <result name="response" numFound="3" start="0"> <doc> <str name="name">Covent Garden</str> <int name="woeid">17043</int> <double name="longitude">-0.124179084074</double> <double name="latitude">51.5143549184</double> </doc> <doc> <str name="name">Hatton Garden</str> <int name="woeid">20094322</int> <double name="longitude">-0.109189265226</double> <double name="latitude">51.5180002638</double> </doc> <doc> <str name="name">New Covent Garden</str> <int name="woeid">20094338</int> <double name="longitude">-0.143671513716</double> <double name="latitude">51.4805055347</double> </doc> </result>

At Flickr, we built Nearby

using a similar approach in Vespa.

This isn't going to replace PostGIS any time soon but it will allow you to filter your queries by a specific radius, using a plain old GET request, which is a Good Thing ™. There's a lesson in that which is: Even when you actually figure out how to use PostGIS all the weird setup hoops and syntax quirks required to do anything make it feel like you might as well be stabbing yourself in the face. It is a very good spatial database but it's also not very much fun. There's also the part where Solr has replication and Postgres doesn't...

So, the obvious candidate for the Solr-love would be a local copy of all your Flickr data, exported through the API. Maybe an extension to Net::Flickr::Backup that writes to Solr at the same time. I've actually started to do that but then decided to try something a bit simpler to get my feet wet: Why not import all of the GeoPlanet data set in to Solr? It's not immediately obvious why you'd want to use Solr instead of a plain old database but I figured that the ability to facet the parent and child relations and adjacencies alongside all the usual search-y bits for place names made it worth at least trying.

The data itself is exported as tab-separated files, with aliases (alternate names) and adjacencies for every WOE ID stored in separate files. This is fine except for the part where reading those files in to memory and feeding the Solr index, at the same time, is an outrageous memory hog. Instead I opted for exporting the aliases and adjacencies to a SQLite database. The actual list of places is read, line by line, using a simple Python script that pokes the temporary database per WOE ID.

Then all you need to do is this:

python import.py --solr http://localhost:8983/solr/geoplanet \ --data /path/to/geoplanet-7.4.0 \ --version 7.4.0

It takes about an hour to index the entire dataset on my laptop. Here's what London (WOE ID 44418) looks like after it's been indexed:

<doc> <arr name="adjacent_woeid"> <int>18074</int> <int>19919</int> <int>19551</int> <int>14482</int> <!-- and so on --> </arr> <arr name="alias_CHI_Q"><str>伦敦</str></arr> <arr name="alias_CHI_V"><str>倫敦</str></arr> <arr name="alias_DUT_Q"><str>Londen</str></arr> <arr name="alias_ENG_V"><str>LON</str></arr> <arr name="alias_FIN_Q"><str>Lontoo</str></arr> <arr name="alias_FIN_V"><str>Lontoon kautta</str></arr> <arr name="alias_FRE_Q"><str>Londres</str></arr> <arr name="alias_ITA_Q"><str>Londra</str></arr> <arr name="alias_JPN_Q"><str>ロンドン</str></arr> <arr name="alias_KOR_Q"><str>런던</str></arr> <arr name="alias_POR_Q"><str>Londres</str></arr> <arr name="alias_SPA_Q"><str>Londres</str></arr> <str name="iso">GB</str> <str name="lang">ENG</str> <str name="name">London</str> <int name="parent_woeid">23416974</int> <str name="placetype">Town</str> <int name="woeid">44418</int> </doc>

See all those alias_ fields? Every language+type pair (the FRE_Q part of the field name) is stored, but not indexed. All of the aliases, along with the default name, are also copied to a single dynamic field called names and the boost for each value is set according to the alias type. Anything ending in _V is assigned a score of 0.5; anything ending in _N a score of 2.0; everything else is left unchanged (the default boost value being 1.0). The types aren't officially documented anywhere on the GeoPlanet site but this is what I've been able to learn about them so far:

- N is a preferred local name

- P is a preferred English name

- Q is a preferred name (in other languages)

- V is a valid variant name that is unpreferred

- S — dunno

- A — dunno

The idea isn't that you search for a particular alias by trying to divine what language and type it's been assigned but rather that you just query the names field, which contains all the possibilities, and sort out the language stuff on your own. Like this:

# Don't laugh, there's a town called Poo in Spain... # # /select?q=name:poo+OR+names:Londra&fl=name,woeid,alias_ITA_Q <result name="response" numFound="4" start="0"> <doc> <str name="name">Poo</str> <int name="woeid">770487</int> </doc> <doc> <str name="name">Poo</str> <int name="woeid">770486</int> </doc> <doc> <str name="name">Fernando Poo Islote</str> <int name="woeid">12465615</int> </doc> <doc> <str name="name">London</str> <int name="woeid">44418</int> <arr name="alias_ITA_Q"> <str>Londra</str> </arr> </doc> </result>

This is not a geocoder. The Solr community is talking about adding geocoding support to version 1.5 but until then if you need a geocoder take a look at the work that Schuyler and FortiusOne have been doing. There might be some ways to make Solr play a geocoder on TV between now and version 1.5 but it's definitely not going to work using anything I've done to date. In the short-term it would probably be worth generating, and indexing, a fully qualified name (city, state, country; that sort of thing) for each place because if you search for

the only match is WOE ID 26332791 (Universite du Quebec a Montreal UQAM) and not WOE ID 3534 (the city of Montreal). Baby steps.Montreal Quebec

Another thing that would probably be useful is storing the complete list of ancestors for each WOE ID. One way to do that would be to add them as machine tags. Something like a multivalue field that contained woe:PLACETYPE=WOEID strings that could also be used to populate (using Solr's copyField magic) separate namespace, predicate and value fields (more on that below). If the order of stuff added to a multi-value field is always preserved that might work but I haven't done enough poking to say for sure.

Version 7.4.0 of the GeoPlanet dataset shipped with a list of WOE IDs that, for one reason or another, have been deprecated and replaced by a newer WOE ID. The import.py script will check to see whether the data has been added to your SQLite database. If it has then for each WOE ID (in the places.tsv file) an additional lookup will be done to identify and store any WOE IDs it supercedes (in a multi-value field called surprising enough supercedes_woeid). Every ID that's been superceded will also be updated to reflect the WOE ID that replaces it. If no record for the WOE ID that's being superceded then a stub record is created that contains two fields: woeid, supercededby_woeid. That way, if someone passes you a WOE ID that's been deprecated you can either return the pointer immediately or include the provenance (backwards or forwards) for the data you do return.

That's all great except for the part where the GeoPlanet data doesn't have any coordinate data associate with it. Of course there are ways to get it anyway but I didn't mention that, did I? Instead, I added some code to read in the Flickr Shapefiles (Public Dataset). So far, only the most recent (not a donut hole) shapefile is used to calculate the centroid for a WOE ID. The bounding box is also stored as a set of individual fields: sw_latitude, sw_longitude, ne_latitude, ne_longitude. I'm not sure that's the right thing to do, long-term. There's an interesting paper about using Hadoop to do GIS processing where coordinate data is stored using the Well Known Text serialization which might be worth trying. Eventually, it was all starting to smell like yaks so I opted for the simplest thing you could actually query today (I am not quite ready to cross the river and start writing my own query plugins in Java just yet) and moved on.

Once you've got a copy of the shape data, importing it looks like this:

python import_flickr_shapefiles.py --solr http://localhost:8983/solr/geoplanet \ --flickr /path/to/flickr_shapefiles_public_dataset_1.0.1.xml

There are about 5.3 million WOE IDs in the GeoPlanet dataset and only about 175, 000 shapefiles (some of which are ignored because they make the library used to parse them cry) which is at least a start. Coverage will be good in major European and North American cities and probably a bit weird everywhere else which is the nature of the beast. There are lots of other datasets out there and the only thing that's needed is a way to determine a matching WOE ID.

I've put everything up on Github:

http://github.com/straup/solr-geoplanet

This includes the various config files that Solr uses and the import scripts described here, along with a modified version of pysolr (to support boost values when calling the add method) because I haven't gotten around to submitting a patch. There's a README file that walks through the basics of getting everything set up but assumes that you've already spent a little bit of time reading the Solr documentation. One of the nicest things about getting to know Solr is that the community has done a fabulous job documenting it. I've got a bunch of bookmarks on del.icio.us and the Solr book is actually really useful.

Everything uses the standard example

application that ships with Solr, including the admin interfaces for adding and removing documents. Unless you actually know how to configure Java web server thingies you should not deploy this anywhere within sight of the public. (The idea is usually that you run Solr on an internal port and have your application talk to it locally over HTTP and fuss with the results before handing them back to users.) Tangentially related is the part where Jetty, the Java web server thingy used by Solr, just grew support for WebSockets which might prove to be fun and exciting. In the meantime I will just hope for friendlier Jetty documentation...

Finally:

I mentioned machine tags before. They were the catalyst for nosing around with Solr. I am doing a workshop on machine tags at Museums and the Web, next year, and the Department of Talk is Cheap has mandated that I be able to demonstrate a functional machine tag store that people can use without having to work at Flickr. I've got the shape of it working including the ability to (finally) do range queries as part of the solr-flickr project, which is also on Github.

I mention it because the whole tag/machine tag thing seems like a good stack of data to squirt into a Solr-enabled GeoPlanet. There are plenty of places to pull that data from on Flickr: Doing a straight-up search for a WOE ID; Indexing the top tags (and clusters) for a WOE ID (that's how most of the I See DOTS images were created); checking for machine tags that are known to have geo data and then fetching second and third order tags for photos added with recent values

(for a given machine tag). The list goes on and indexing and sorting all those little bits of text is precisely the kind of thing that Solr is good at. Maybe we can build the alpha shape equivalent of a geocoder, whatever that means.

In the end it might be easiest to feed all of that data to Solr using a bunch of (languages that start with P) scripts but Solr does support the notion of data import handlers. This includes stuff like database connections or any old URL that can retrieved from the Internets and parsed with XPath. Since most of the stuff I've described above doesn't need to be authenticated it should be as easy as building the query URI and assigning it as the HttpDataSource

. This approach relies on the endpoint you're calling have default date limits (say, the last n hours) which the Flickr API does in most cases. All you need to do is set up a cron job to call the delta-import command (read: HTTP GET) at set intervals and your GeoPlanet dataset will be updated automagically, modulo any thoughts about how to deal with multiple instances of the same tag or how to calculate boost values for terms.

I'm just saying...

This blog post is full of links.

#woelr